A Short History of Berkeley RISC

The 80s to today

David Andrew Patterson was born on the 16th of November in 1947 in Evergreen Park in Illinois. His family moved to California, and Patterson attended and graduated from South High School in Torrance. His high school sweetheart, Linda, grew up nearby in Albany. He then attended UCLA where he received his Bachelor’s in mathematics in 1969. A wrestler and a math major, he decided to try a computer programming course when his preferred mathematics course was canceled. This would prove to be a major moment for computing history as this random life event changed Patterson’s direction in both his academic and professional life. He once said:

even with punch cards, Fortran, line printers, one-day turn-around—I was hooked

Patterson received his Master’s in computer science in 1970. He and Linda married, and they had two sons. To support himself and his family while working toward his Ph.D., Patterson took a part-time job at Hughes Aircraft working on airborne computers. He received his Ph.D. in 1976.

After his graduation, Patterson was hired by Berkeley’s computer science department. There, he worked with Carlo Sequin on the X-TREE project led by Alvin Despain, which was, according to him:

way too ambitious, no resources, great fun

In 1979, Patterson did a three-month stint at DEC working to fix microcode errors on a VAX minicomputer. During this time, he realized that simplifying the instruction set architecture would go a long way toward reducing the number of microcode bugs. Patterson brought that idea back to Berkeley, where he and Sequin tasked computer science students with exploring a reduced instruction set. The result was RISC I (the working name was actually “Gold”).

RISC I was built from 44500 transistors. It had 31 instructions, and its register file contained seventy-eight 32-bit registers. The control and decode section of RISC I used just 6% of the die (typical designs of that era might use 50% for the same). The chip also had a two-stage instruction pipeline. The final design was sent to fabrication on the 22nd of June in 1981, and it was built using a 2 micron process. The chips were delayed multiple times, and the first working RISC I CPUs arrived at Berkeley in May of 1982, with the first machine running on the 11th of June in 1982. RISC I did not perform quite as well as expected: some instructions ran quickly and others did not. The suspicion was that the problems with the chips were physical and not logical. Yet, despite being slower than hoped, RISC I proved the theory of RISC quite adequately. RISC I was built on an already old process, but clocked at 4 MHz, it was roughly twice as performant as the 32-bit VAX 11/780 at 5 MHz, and roughly four times more performant than the Z8000 at 5 MHz. It turned out that the code density advantage of CISC mattered little to the overall performance of a computer.
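To make the clock-for-clock comparison concrete, here is a rough sketch that rescales the speedups quoted above to equal clock rates. The helper name `per_clock_advantage` is made up for illustration, and the calculation assumes performance scales linearly with clock frequency, which is a simplification rather than a measured result.

```python
def per_clock_advantage(speedup, own_mhz, rival_mhz):
    """Rescale an overall speedup to what it would be at equal clock rates.

    Assumes (simplistically) that performance is proportional to clock rate.
    """
    return speedup * rival_mhz / own_mhz


# Figures quoted in the text: RISC I at 4 MHz, both rivals at 5 MHz.
vax = per_clock_advantage(2.0, own_mhz=4.0, rival_mhz=5.0)    # vs VAX 11/780
z8000 = per_clock_advantage(4.0, own_mhz=4.0, rival_mhz=5.0)  # vs Z8000

print(f"vs VAX 11/780: about {vax:.1f}x per MHz")
print(f"vs Z8000: about {z8000:.1f}x per MHz")
```

Under that assumption, the slower clock makes RISC I's lead look somewhat larger per cycle than the headline figures suggest.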

In the early 1980s, there was a vibrant spirit of innovation surrounding silicon chip technologies. MIT, Xerox PARC, Berkeley, Stanford, Intel, Fairchild, and even the US government were all investing in semiconductor fabrication technologies, and things were moving quickly. Robert Kahn and Duane Adams had previously convinced DARPA to provide funding to Caltech for silicon projects, and they and DARPA had seen Lynn Conway’s success at MIT with computer-aided design tools, where she taught VLSI design by doing VLSI design. DARPA then made two investments in VLSI work. One of these was at UC Berkeley with RISC, and the other was at Stanford with MIPS. Both of these built on Conway’s pioneering work (which really deserves its own article), and used CAD as a fundamental piece of the design toolset. The DARPA VLSI project also involved investments in graphics to make that CAD software better, and helped create the Sun workstation. DARPA felt that a UNIX operating system would be needed, and some of the funding for the VLSI project helped to create the Berkeley Software Distribution (BSD).

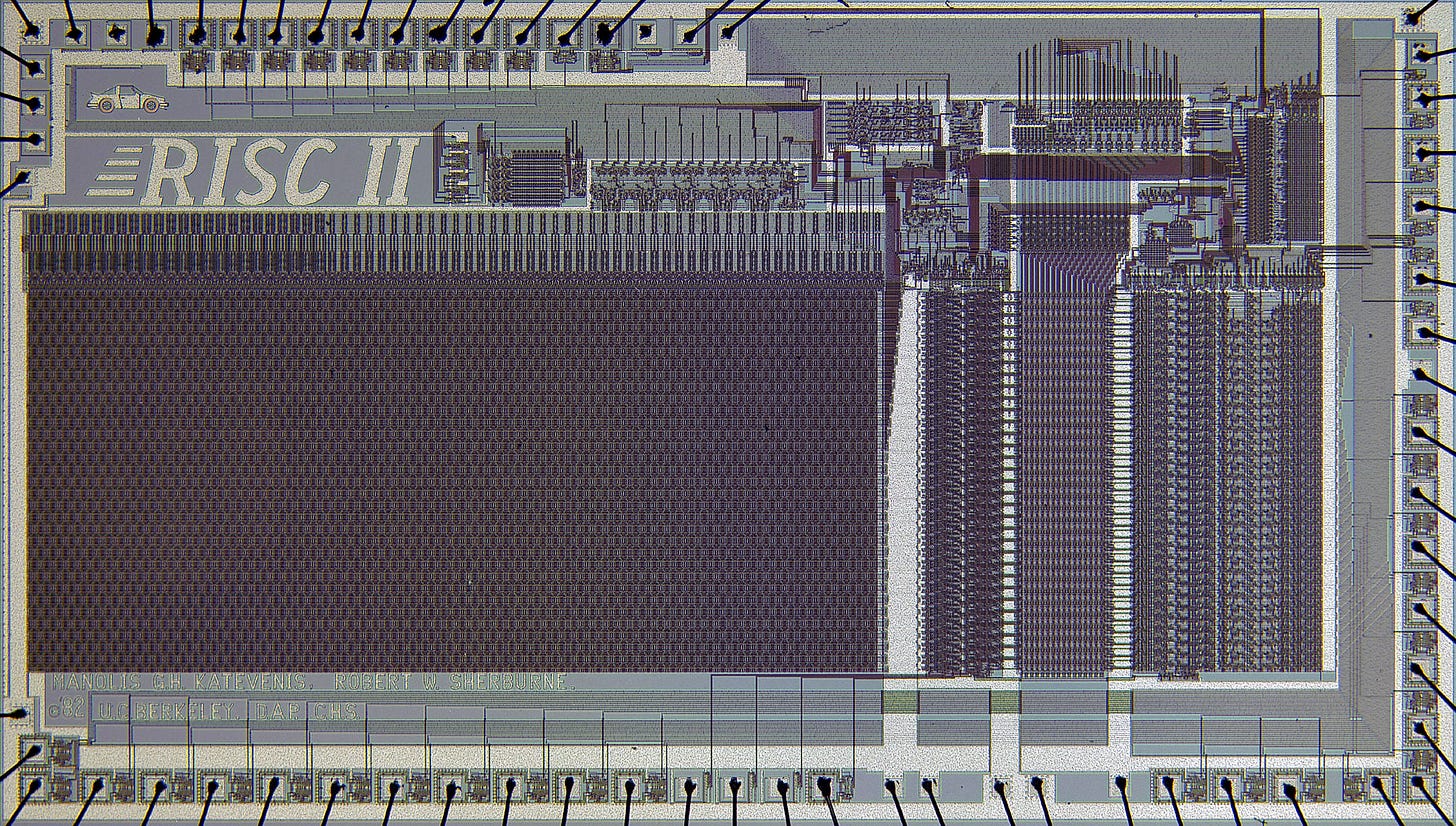

Work on the next RISC design, named Blue, began before the RISC I chips returned from fabrication. This time, Sequin led the project at Berkeley, and Patterson concentrated on managing cooperation between Berkeley and DARPA. Blue became RISC II, and this design had simpler cache circuitry, was made of 40760 transistors, and had 138 registers. It was fabricated on a 3 micron NMOS process, and it ran at 3 MHz. RISC II was about three times faster than RISC I while being around half the size. That performance increase meant that RISC II competed well against VAX minicomputers, with performance ranging from 85% to around 250% that of VAX. Against the Motorola 68000, RISC II did better still, with performance around 140% to over 400% that of the 68000.

The RISC II design was adopted (almost directly) by Sun Microsystems leading to the creation of SPARC. The success of SPARC encouraged the adoption of RISC-like ISAs across the UNIX workstation market. Suddenly, every vendor had to have a RISC offering: IBM with Power, DEC with Alpha, SGI with MIPS. UNIX workstations were RISC workstations.

RISC II was followed by SOAR in 1984, which was designed to run Smalltalk. It was fabricated on a 4 micron NMOS process, was made of 35700 transistors, consumed 3 watts, and ran at 2.5 MHz.

In 1988, Berkeley made the SPUR chips. These were a chipset, unlike other Berkeley RISC designs, and they were intended to run LISP. These were made of 293599 transistors on a 1.6 micron CMOS process, and ran at 10 MHz achieving 3 megaflops.

In 1990, Berkeley made VLSI-BAM (very large scale integration Berkeley abstract machine). This was back to a single chip, and this was one that ran Prolog. Fabrication for VLSI-BAM was 1.2 micron CMOS. It was made of 112000 transistors, and it ran at 20 MHz consuming 1 watt at 5 volts.

The rise of the UNIX workstations and the promise of RISC led many to believe that RISC would quickly win, that x86 would die, and that the WinTel dominion would end. This did not happen. Microsoft’s Windows had the applications people needed, and the rise of Intel’s 32-bit x86 chips gave people good performance far more cheaply than UNIX workstations. This combination had the added benefit of being backwards compatible with the 16-bit software investments companies had already made. While these machines weren’t as powerful, they were powerful enough for most common tasks. As time progressed, Intel’s chips and Windows NT would actually take the performance crown. A combination of the dot-com crash, Intel’s Itanium, and the creation of AMD64 put the nails in the coffins of both commercial UNIX workstations and of the architectures they utilized.

Yet, RISC wasn’t done. One player remained, ARM (not Berkeley RISC, but still RISC). ARM effectively kept RISC alive. The Apple A7 in the iPhone 5S in 2013 was an ARMv8-compliant chip with thirty-one 64-bit general purpose registers, thirty-two 128-bit floating point registers, 64K data cache, 64K instruction cache, a 1MB L2 cache, a 4MB L3 cache, clocked at 1.3 GHz, and built on a 28nm process. The A7 SoC also contained a PowerVR G6430 GPU that shared the L3 cache. This thing was also quite wide (for the time) with 6 decoders. It had four FPUs. It had two branch units and two load/store units. This was the return not just of powerful ARM chips, but of powerful RISC CPUs. The A7 delivered desktop-class performance at a fraction of the power consumption, a fraction of the heat creation, and in a smaller package.

Within Berkeley, however, RISC had never died.

In May of 2010, Professor Krste Asanović was working in Berkeley’s Parallel Computing Laboratory (Par Lab), where Professor David Patterson was the director. Par Lab had made the Chisel hardware construction language, and they had received $10 million (over the years 2008 to 2013) from Intel and Microsoft to advance parallel computing. The State of California and DARPA had also made contributions. The money, the tooling, and the institutional knowledge of RISC provided ample resources for Asanović to start work on another RISC design. As with all other projects at Par Lab, the design would be open source under the terms of the BSD license. In this endeavor, Asanović worked with graduate students Yunsup Lee and Andrew Waterman, and together, the team first published the RISC-V ISA in 2011. In 2015, the RISC-V Foundation was established to maintain RISC-V, and in 2019 the foundation relocated to Switzerland.

RISC-V has been finding a home in various places: RISC-V cores act as controllers for newer Seagate drives, Google’s Titan M2 security module in the Pixel 6 and Pixel 7 phones uses RISC-V cores, and Western Digital uses RISC-V cores in their SSD controllers. There are some RISC-V computers available for purchase from the likes of SiFive, and there are also hobbyist RISC-V machines available from ClockworkPi and StarFive. Many companies are heavily investing in RISC-V currently, and one can reasonably expect that RISC-V will grow in adoption over the next decade.

The WinTel vs UNIX/RISC battle is back. AMD and Intel machines running Windows are facing competition from a commercial UNIX-on-RISC workstation: Apple with macOS on ARM. The future may bring added competition from open source Linux on RISC-V. It’s an exciting time.