The History of GM-NAA I/O and SHARE

The Birth of Computer Operating Systems

Were a computer user of today to travel back in time to the 1950s and early 1960s, he/she would find computing completely alien. This was the era of mainframe machines. The first of these machines that IBM offered commercially was the 701, announced on the 21st of May in 1952. This machine competed with Remington Rand’s UNIVAC 1103.

The 701 was an amazing machine for its time. Built in Poughkeepsie, New York and weighing in at twenty thousand pounds, this thirty-six-bit, vacuum tube monster could perform sixteen thousand addition or subtraction operations per second, or roughly two thousand multiplication or division operations per second. The 701 had no floating point hardware; floating point arithmetic was provided by software routines, and this capability was made note of in press announcements. From IBM:

In preparation for the use of this machine by American industry, a staff of IBM scientists has been engaged for two years in planning the economical solution of typical problems. One result of this work is that users of the machine need no longer be concerned with tracing the position of the decimal point through problems involving thousands or millions of sequential arithmetical steps.

Using a "floating point" technique, the machine notes the position of the decimal point in the input numbers, keeps track of the point, and finally reports the position of the decimal point as the results are printed.
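The announcement’s description of floating point, keeping track of where the decimal point sits so the programmer doesn’t have to, can be illustrated with a minimal sketch. It assumes a decimal mantissa and exponent pair, a hypothetical eight-digit precision, and truncation instead of rounding. The 701’s actual routines worked on binary words in software, so this shows the idea rather than IBM’s format, and the class name `Float10` is made up for the example.

```python
from dataclasses import dataclass
from fractions import Fraction

DIGITS = 8  # hypothetical precision: keep at most 8 decimal digits in the mantissa


@dataclass(frozen=True)
class Float10:
    mantissa: int  # signed significand (the digits)
    exponent: int  # the tracked decimal point: value = mantissa * 10**exponent

    def normalize(self) -> "Float10":
        sign = -1 if self.mantissa < 0 else 1
        m, e = abs(self.mantissa), self.exponent
        # Truncate low-order digits, moving the decimal point to compensate
        while m >= 10 ** DIGITS:
            m //= 10
            e += 1
        return Float10(sign * m, e)

    def __mul__(self, other: "Float10") -> "Float10":
        # Multiply the digits; the decimal point positions simply add
        return Float10(self.mantissa * other.mantissa,
                       self.exponent + other.exponent).normalize()

    def __add__(self, other: "Float10") -> "Float10":
        # Line up the decimal points before adding the digits
        e = min(self.exponent, other.exponent)
        m = (self.mantissa * 10 ** (self.exponent - e)
             + other.mantissa * 10 ** (other.exponent - e))
        return Float10(m, e).normalize()

    def value(self) -> Fraction:
        # Exact value, for checking results
        return Fraction(self.mantissa) * Fraction(10) ** self.exponent
```

For example, `Float10(125, -2) * Float10(4, 1)` (1.25 times 40) multiplies the digits to get 500 and adds the exponents to get -1, which is 50; the point is tracked by the arithmetic, not by the programmer.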

The 701 used seventy-two cathode ray tubes (Williams tubes) as RAM, providing four thousand ninety-six words, equivalent to roughly 18K. This could be doubled with a second set of seventy-two tubes. These tubes were supplemented by magnetic drums and magnetic tapes, and RAM could also be upgraded to magnetic core memory later in the decade. The machine could take input from punched cards, paper tape, or magnetic tape. Most customers never bought an IBM mainframe, as the cost ran into the millions; they rented them instead, and rental pricing for this machine started at $11900 per month (roughly $138000 in 2023, or around $1.6 million each year). For this money, the customer received not only the machine itself but also its installation, and the machine’s operators received three weeks of instruction on its use. This was critical, as these computers were not exactly user-friendly and computers were still a very new technology.

In a rather sophisticated setup for an early mainframe computer, software engineers would write their programs on paper with pen or pencil. These programs would then be handed to a keypunch professional who would transfer the code onto cards. The system operator would feed those cards into the card reader, and the results would usually be printed and optionally stored on magnetic reel-to-reel tape. In installations without a card punch, paper tape was the usual mechanism. In the most expensive installations, programs could be loaded onto magnetic tape and fed into the machine via that medium. Initially, there were no operating systems for these computers. A long queue of programs waiting to be run would build up, and the system operator would load one program, allow it to complete, and then load the next. That operator essentially needed to be present the entire time: if a hardware or software glitch was encountered, there were no automatic restart processes and no on-line debuggers, and there was no way to move on to the next program automatically, as the queue was a stack of physical cards not yet loaded into the card reader. Between jobs, an operator might also need to reconfigure the computer via plugboards. When a plugboard was inserted into an early mainframe computer, its plugs contacted sockets that were hardwired to card read brushes, card punch controls, internal counters, and other logic. Plugboards were essentially a form of reprogrammable configuration for the computer.

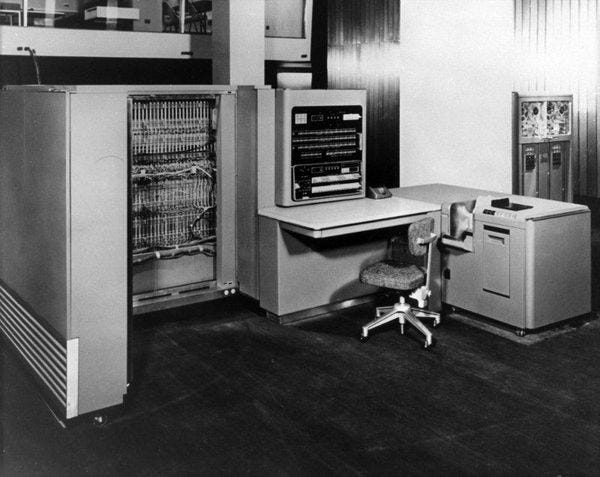

The seventh of the nineteen 701s produced was installed at Convair in Fort Worth, Texas. This machine was capable of about 150000 operations per second, cost $23750 per month, and essentially occupied a forty-foot-square room. At Convair, there wasn’t a dedicated system operator. Programmers lined up with punched card decks in hand, and as soon as one finished, the next rushed up to load his/her cards. The programmer would then check that the correct plugboards were in the card reader, the printer, and the punch, and he/she would then need to hang a tape (if one was to be used), punch in on the time clock, address the console, set any necessary switches, load the card deck into the reader, say a quick prayer to avoid a jam, and finally hit the load button to start the bootstrap sequence. This whole process took a minimum of five minutes if the programmer was really hustling, and program execution would usually start around five minutes after that. Any mistake in any part of this process, whether the fault of the machine or the programmer, could cost the programmer his/her time slot entirely, because machine time was tightly allocated and tracked (referred to as accounting). To underscore this pain point, the mean time to failure on the 701 was around thirty minutes. This was largely due to the memory type, but vacuum tubes are also prone to failure, and machines such as these used a surfeit of them.

This setup presented a serious problem. The turnaround time between jobs was lengthy, the computer was costly, and companies wanted to make the most of their investment. Yet, as many of us know today, technically inclined computer operators are, more often than not, wont to automate tedium. This automation began slowly and modestly. First, the plugboards were informally standardized, which eliminated one major time sink in getting set up for program execution. Next, bootstrapping was moved from cards to magnetic tape, which reduced that step from around three minutes to just ten seconds. Robert L. Patrick then came up with a rather unique idea. He chose to load each input record (his program’s data) to a unique address so that he could store an entire program on magnetic tape and load only patches, program modifications, and data through the card reader. He was then able to get four or five runs each day in one quarter of the machine time other programmers spent on a single run. Patrick was also a Speedcoding user. Speedcoding was an interpreted language only slightly higher-level than assembly, and it was viewed negatively by purists. For purists, only assembly language would do, and even then they were using rather primitive assembly languages and would often resort to binary coding for certain routines. Despite this, Patrick was getting more done every day. With so much time freed, he was able to make four observations that would optimize machine time:

Get programmers off of the console and out of the machine room.

Standardize on setup.

Avoid reloading by using magnetic tape and updating with a change deck.

Reuse code liberally wherever possible.
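Patrick’s approach of keeping a whole program on tape and feeding only changes through the card reader can be sketched as patching a saved memory image. This is a minimal illustration under stated assumptions: the change-deck card format (an octal address followed by an octal word) and the function names are hypothetical, and real 701 loading worked with binary card images rather than text.

```python
WORD_MASK = (1 << 36) - 1  # the 701 used 36-bit words
MEMORY_WORDS = 4096        # the 701's basic memory size


def load_from_tape(tape_image: list[int]) -> list[int]:
    """Load a previously saved program image into a fresh memory array."""
    if len(tape_image) > MEMORY_WORDS:
        raise ValueError("program image larger than memory")
    memory = [0] * MEMORY_WORDS
    memory[: len(tape_image)] = tape_image
    return memory


def apply_change_deck(memory: list[int], cards: list[str]) -> list[int]:
    """Patch individual words; each hypothetical card holds 'octal-address octal-word'."""
    for card in cards:
        address_text, word_text = card.split()
        address, word = int(address_text, 8), int(word_text, 8)
        if not 0 <= address < len(memory):
            raise ValueError(f"address {address_text} out of range")
        memory[address] = word & WORD_MASK  # keep the patch within one word
    return memory
```

Only the few cards in the change deck pass through the slow card reader; the bulk of the program comes off tape unchanged on every run.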

In 1954, the seventeenth 701 was being installed at General Motors Research Laboratories in Detroit. Patrick moved there around the same time and brought his techniques with him. By 1955, Patrick’s techniques had been merged with other efforts at GM and formalized into the General Motors I/O System (commonly referred to as GM I/O or the GMR Monitor). This was a system of three phases: jobs were batched together according to their input formats, the jobs were run, and the output was then processed for conversion to human-readable form and for printing. The key piece of software in this process was the program Patrick had stored on tape to run his programs, load modifications and data from cards, and handle output, all without really worrying about the plugboards.

Around the middle of 1955, GM ordered an IBM 704. A team was assembled to plan the installation, led by George Ryckman with Patrick serving as the architect. The 704 was roughly twice as fast as the 701, and it used core memory from day one in place of CRT memory. This one change increased the mean time to failure to eight hours or so, which had two major impacts. First, the run time of a program could be far longer, so programs could be more complex and sophisticated. Second, more total work could be done, as less time would be spent on maintenance. The 704 also optionally featured a CRT display for output. This was not a terminal, just a programmable display for whatever output was useful in that format, such as error output.

All of this software and process development was occurring while IBM’s customers were forming a users’ group called SHARE. IBM distributed software to members of the group in source form, and members would make modifications and share their software resources to the benefit of all. The first meeting of SHARE, on the 22nd of August in 1955, was attended by 704 installation owners representing seventeen of the total nineteen installations: Boeing, Lockheed, California Research, Curtiss-Wright, General Electric, General Motors, Hughes Aircraft, IBM, North American Aviation, NSA, Rand Corporation, United Aircraft, Douglas Aircraft, and University of California. There was a hope that someone would come up with a clever meaning for the name, but none was ever officially adopted. As far as I can tell, meetings were held nearly every month, with the first in August and the second in September largely concerned with establishing procedures and standards. The third meeting of SHARE was held in Boston on the 10th and 11th of November in 1955. This was the meeting that properly started the sharing of software and of hardware augmentations. At SHARE 3, GM proposed a new operating system for the IBM 704 that would improve upon GM I/O and take advantage of the new machine’s greater capabilities. North American Aviation took an interest, and the two companies worked jointly on the project. The primary authors of the new OS were Owen Mock (NAA) and Robert L. Patrick (GM).

Fourteen months after the proposal at SHARE, the system had been implemented and documented. In this system, all input and output were processed offline on card-to-tape equipment. The input tape contained a file called SYSIN, and the output tape contained a file called SYSOUT; the computer itself operated tape-to-tape. SYSIN contained a batch of independent jobs, each identified by a control card. A program to be run was identified by a job card containing the programmer’s name and system accounting information, and the cards that followed contained header information that controlled its conversion from decimal to binary as well as its subsequent processing. In a single pass of the machine, the system could assemble a program, load it into memory, present it with data, and record the results of both assembly and execution. This process later became known as “load and go.” For a 704 running GM-NAA I/O, the plugboards could essentially be made permanent, as all configuration was done in software rather than by rewiring boards. Professional operators were then brought in, and the programmers were taken out of the machine room. Because the system provided several libraries to the programmer, programming time decreased. Additionally, some standard debugging tools were built in, reducing the total time spent on development. Regarding debugging, Patrick notes:

There were two versions of the original OS package because Mock and I could not agree on how debugging during the Compute phase was to be handled. The GM version had a run-time monitor which used a core map in memory which the programmer was obligated to maintain during execution. If a program failed to run to completion, the monitor used the core map to selectively dump memory in a meaningful format for return to the programmer. (Online traces were so inefficient they were seldom used and there was no attached terminal hardware available.) After a memory dump, the OS proceeded to the next job in the queue without stopping.
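The GM approach Patrick describes, a core map maintained by the program and used by the monitor to dump only the meaningful parts of memory before moving on, can be sketched as follows. The core map format (region name mapped to first and last address), the octal dump layout, and all names here are assumptions made for illustration; a Python exception stands in for a program that fails to run to completion.

```python
from typing import Callable

Memory = list[int]
CoreMap = dict[str, tuple[int, int]]  # hypothetical: region name -> (first, last) address


def selective_dump(memory: Memory, core_map: CoreMap) -> list[str]:
    """Dump only the regions the program declared, one octal line per region."""
    lines = []
    for name, (first, last) in core_map.items():
        words = " ".join(f"{w:012o}" for w in memory[first:last + 1])
        lines.append(f"{name} {first:05o}: {words}")
    return lines


def run_batch(jobs: list[tuple[str, Callable[[Memory, CoreMap], None]]],
              memory_size: int = 32) -> list[str]:
    """Run every job in order; a failure produces a dump, never a halt."""
    sysout: list[str] = []
    for name, program in jobs:
        memory: Memory = [0] * memory_size
        core_map: CoreMap = {}  # the program is obligated to keep this up to date
        try:
            program(memory, core_map)
            sysout.append(f"{name}: completed")
        except Exception as exc:  # stands in for a program failing mid-run
            sysout.append(f"{name}: failed ({exc})")
            sysout.extend(selective_dump(memory, core_map))
        # No operator intervention: proceed to the next job in the queue
    return sysout
```

The design choice mirrors the quote: the burden of describing memory is placed on the program, so the monitor can return a meaningful dump without understanding the program itself.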

At GM, the IBM hardware was supplemented by a binary time-of-day clock designed and built by George Ryckman. The OS read the clock whenever a job card was read, and the system output the accounting information for each job on a trailing page of the printout. Programmers were thereby made aware of the resources they used and the cost of those resources. This encouraged a bit of self-discipline… Microsoft personnel might refer to this as “hardcore.”
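Job cards on SYSIN and the clock-based accounting trailer can be sketched together. Everything specific here is hypothetical: the `JOB name account` card layout, the abstract clock units, and the assumption that each job is charged the time between its own job card and the next one (with one final reading at the end of the batch).

```python
from dataclasses import dataclass, field


@dataclass
class Job:
    programmer: str
    account: str
    cards: list[str] = field(default_factory=list)  # control and data cards for this job


def split_sysin(sysin: list[str]) -> list[Job]:
    """Group SYSIN cards into jobs; each job starts with a hypothetical 'JOB name account' card."""
    jobs: list[Job] = []
    for card in sysin:
        if card.startswith("JOB "):
            _, programmer, account = card.split(maxsplit=2)
            jobs.append(Job(programmer, account))
        elif jobs:
            jobs[-1].cards.append(card)
        else:
            raise ValueError("SYSIN must begin with a job card")
    return jobs


def accounting_trailers(jobs: list[Job], clock_readings: list[int]) -> list[str]:
    """Charge each job the clock time between its job card and the next reading."""
    if len(clock_readings) != len(jobs) + 1:
        raise ValueError("need one clock reading per job card plus one at end of batch")
    return [
        f"{job.programmer} {job.account}: {end - start} clock units"
        for job, start, end in zip(jobs, clock_readings, clock_readings[1:])
    ]
```

Printing a trailer like this with every job is what made each programmer see the cost of his/her own run.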

Most of the code in the OS was devoted to input and output translators, with only a small amount devoted to actually supporting programmers during execution. When work on the system began in 1955, NAA developed the input translation and GM developed the output translation. In 1956, Roy Nutt’s symbolic assembler was added to the input translation system. This operating system was used on about forty 704s.

SHARE’s first reference manual states that the group was devoted to the 704. Yet, at the December 1956 meeting of SHARE, the group began looking forward to the IBM 709. The members wanted software support ready for these machines upon delivery (the first of which would be installed in August of 1958), and the focus of the group then began to shift from the 704 to the 709. The 709 was in many ways similar to the 704. From Patrick again:

Its architecture could be described as a 704 with channels. In IBM parlance, a channel is a limited purpose computer that shares access to the memory bus with the CPU, and sits astride the information that flows between the memory and the set of input/output devices the customer has installed. When configured with a channel, the CPU and the channel are synchronized only for a few microseconds while the CPU instructs the channel what to do. Then the CPU is free to process data while the channel selects the device and transfers other data to/from memory.
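A back-of-the-envelope model shows why overlapping computation with channel I/O mattered. It assumes each record takes a fixed time to read and a fixed time to process, and two buffers so the channel can fill one while the CPU works on the other; it ignores the few microseconds of synchronization Patrick mentions, and the timings in the example are made up.

```python
def serial_time(n: int, t_io: int, t_cpu: int) -> int:
    """Without a channel, the CPU drives each transfer itself: I/O and compute alternate."""
    return n * (t_io + t_cpu)


def overlapped_time(n: int, t_io: int, t_cpu: int) -> int:
    """With a channel and two buffers, record k+1 is read while record k is processed.

    After the first read fills the pipeline, each further record costs the slower
    of the two stages, and the last record still needs its processing time.
    """
    if n == 0:
        return 0
    return t_io + (n - 1) * max(t_io, t_cpu) + t_cpu
```

With 100 records taking 10 units to read and 5 to process, `serial_time` gives 1500 units while `overlapped_time` gives 1005, since the slower stage (reading) sets the pace once the pipeline is full.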

Yet, despite being an iterative improvement over the 704, the 709 was a new and different machine, more complex than its predecessor. In addition to the channels, it offered indirect addressing and three convert instructions, which could handle things like decimal math in hardware instead of software. It was still a vacuum tube machine that consumed 250 kW and required nearly as much power again for cooling, but it featured thirty-two thousand seven hundred sixty-eight words of thirty-six-bit magnetic core memory. It was also much faster, capable of around forty-two thousand add/subtract operations each second. The machine was different enough from its predecessor that IBM offered an emulator of the 704 for it. This emulator handled registers and the majority of instructions in hardware, with things like floating point and I/O routines handled in software. The 709, however, was not to last. The first customer in 1958 was likely rather annoyed, as that same year IBM announced a transistorized version of the 709 dubbed the 7090. The use of transistors made the 7090 roughly six times faster than the 709. The first installation of a 7090 occurred in December of 1959. The price of this monster was $2.9 million purchased (around $31 million in 2023) or $63500 per month rented (around $676000 in 2023) for a model with most of the available optional equipment.

To build out the software for the 709, SHARE established the 709 System Committee with Donald L. Shell of General Electric in Cincinnati as the Chairman. The other members were Elaine M. Boehm of IBM in New York, Ira Boldt of Douglas Aircraft in Santa Monica, Harvey Bratman of Lockheed in LA, Vincent DiGri of IBM in New York, Irwin D. Greenwald of Rand in Santa Monica, Maureen E. Kane of IBM in Poughkeepsie, Jane E. Kind of General Electric in Schenectady, Owen R. Mock of North American Aviation in LA, Stanley Poley of Service Bureau Corp in New York, Thomas B. Steel of System Development Corp in Santa Monica, and Charles J. Swift of Convair in San Diego. This group’s first product was SCAT (SHARE Compiler, Assembler, Translator), which substantially improved debugging capabilities; chiefly, SCAT made the computer’s error output human-readable. After the creation of SCAT, there was a ton of debate about what to do next, and this debate went on for quite some time. The goals eventually agreed upon were an operating system that would be simple and would support non-stop operation, interchangeability of card and tape input, flexibility in programming, automatic error detection, and backward compatibility with the 704 and GM-NAA I/O wherever possible. In the group’s newly established style, the planning phase of the OS took quite some time. By 1959, IBM had published documentation for the SHARE Operating System 1.01 stating that SOS had a threefold purpose: to assist the 709 programmer in creating and preparing software, to automate what can be automated, and to provide the computer installation with efficient operation and accurate accounting.

From what I can find in manuals and from what Patrick has publicly stated, this system was a port of GM-NAA I/O with enhancements to support channels. Additionally, SOS included SCAT’s improved compiler/assembler/debugger package, better job supervision, buffering support, and macros for the input and output editors. This was, however, still a punched card oriented affair. SOS would check job control cards and call routines to handle each job. When handling cards, the compiler (SCAT) would take human-written code and “ensquoze” it. This squoze code was far less redundant and far less semantically sugared than the human-written code from which it was generated, and it was optimized. The ensquozed result was then punched into a group of cards called a squoze deck. These squoze decks would then be operated upon by the Modify and Load program, which consisted of several files on the System tape. From the manual: “when a particular file is needed, it is called into core from System tape, by either the Monitor or another file of Modify and Load and given control. When the file has performed its function, it may pass control to another file of Modify and Load or to the Monitor.” Modify and Load consisted of M1, MO, and M3 through M9; there was no M2. M1 was the bootstrap. When it finished its job, it checked for a mod package in the squoze deck. If none was present, it handed control of the machine to M4; otherwise, MO took control. MO essentially ran a second pass of the compiler over any modifications, ensquozing any new code, and then called M3. M3 took the newly ensquozed code and readied it, just as the bootstrap process had already done for the previously ensquozed code. M4 and M5 were essentially what we today would consider compilers and assemblers. M6 printed error messages, M7 handled card punching, M8 listed the complete program, and M9 printed catastrophic errors and returned control to the monitor.

That’s a lot of detail, but what it boils down to is that Modify and Load was used either to make a fully compiled and assembled machine language deck (referred to as an absolute deck), to transfer the machine language code to tape, to provide a listing of the program, or to punch a new squoze deck (in the case of modifications). While a program was running, SOS provided the SNAP debugger, which accumulated relevant information during program execution. During the program’s output phase, SNAPTRAN did the work of making that accumulated debugging information human-readable.
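The hand-offs between Modify and Load files can be sketched as a small dispatch loop in which each file returns the name of the next file to load, or `MONITOR`. Only the M1 branch (MO then M3 when a mod package is present, M4 otherwise) and M9 returning to the monitor come from the description above; the hand-off from M3 to M4, the exit from M4, and the `catastrophic` flag are assumptions, and M5 through M8 are omitted.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class Run:
    has_mod_package: bool       # does the squoze deck carry modifications?
    catastrophic: bool = False  # hypothetical flag standing in for a fatal error
    trace: list[str] = field(default_factory=list)


def m1_bootstrap(run: Run) -> str:
    run.trace.append("M1")
    # A mod package goes through MO first; otherwise control passes straight to M4
    return "MO" if run.has_mod_package else "M4"


def mo_modify(run: Run) -> str:
    run.trace.append("MO")  # second compiler pass over the modifications
    return "M3"


def m3_ready(run: Run) -> str:
    run.trace.append("M3")  # ready the newly ensquozed code
    return "M4"             # assumption: the text does not name M3's successor


def m4_translate(run: Run) -> str:
    run.trace.append("M4")
    return "M9" if run.catastrophic else "MONITOR"  # assumption


def m9_fatal(run: Run) -> str:
    run.trace.append("M9")  # print catastrophic errors, then return to the monitor
    return "MONITOR"


FILES: dict[str, Callable[[Run], str]] = {
    "M1": m1_bootstrap, "MO": mo_modify, "M3": m3_ready,
    "M4": m4_translate, "M9": m9_fatal,
}


def modify_and_load(has_mod_package: bool, catastrophic: bool = False) -> list[str]:
    run = Run(has_mod_package, catastrophic)
    current = "M1"
    while current != "MONITOR":
        # Each file is "called into core" and given control; it names its successor
        current = FILES[current](run)
    return run.trace
```

A deck without modifications visits only M1 and M4, while a deck with a mod package takes the longer M1, MO, M3, M4 path.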

Input data for a program was first handled by the Input Editor and then passed to INTRAN, which converted it to binary. Output was similarly handled by the Output Supervisor for conversion and formatting, and then made human-readable by OUTRAN for off-line presentation.

After the initial release of SOS, support for FORTRAN and LISP was added. I have seen some reports of FORTRAN being available for GM-NAA I/O as well, but I wasn’t able to determine whether this is true. While SOS is generally described as a derivative of GM-NAA I/O, which was itself a derivative of GM I/O, SOS was far more elegant, far more complex, and far more powerful. Many tasks that had been formalized, off-line, manual processes were automated and integrated into the functions and routines of SOS. Essentially, standard operating procedures for humans became software. GM-NAA I/O and SOS took a ton of repetitive work away from programmers and automated it, and it is in GM-NAA I/O and SOS that we see the first hints of what was to come with mainframe and minicomputer operating systems. SOS was used from late 1958 until roughly the mid-1970s, but IBM discontinued support for the system in 1962. Its replacement was IBSYS, first intended for the IBM 709, 7090, and 7094 and completely incompatible with the SHARE Operating System. The most enduring legacy of SHARE, however, wasn’t entirely technological. SHARE was, as far as I can tell, the first use of an open source development model, and it was quite successful.

Unlike with most of my articles to date, I doubt very much that any of my readers witnessed any of the events described here. Some of you, however, have worked at the companies mentioned and may have second-hand knowledge of events or first-hand knowledge of the hardware and software systems; all corrections to the record are welcome. Below are three of the manuals I found most interesting.