We no longer call them microcomputers

a brief tour of why

Determining which machine was the first computer is somewhat difficult. Was it the Difference Engine, the abacus, the Atanasoff-Berry Computer? The problem is the term. The first electronic, digital, programmable computer was most likely Colossus, which went into service at Bletchley Park in 1944. Colossus still wasn’t a general-purpose computer, and therefore isn’t what we think of as a computer today. It was built to break German encryption in WWII, not to do general computational tasks. The first electronic, programmable, digital, general-purpose computer (that is, a computer we would recognize today) was most likely ENIAC in 1945.

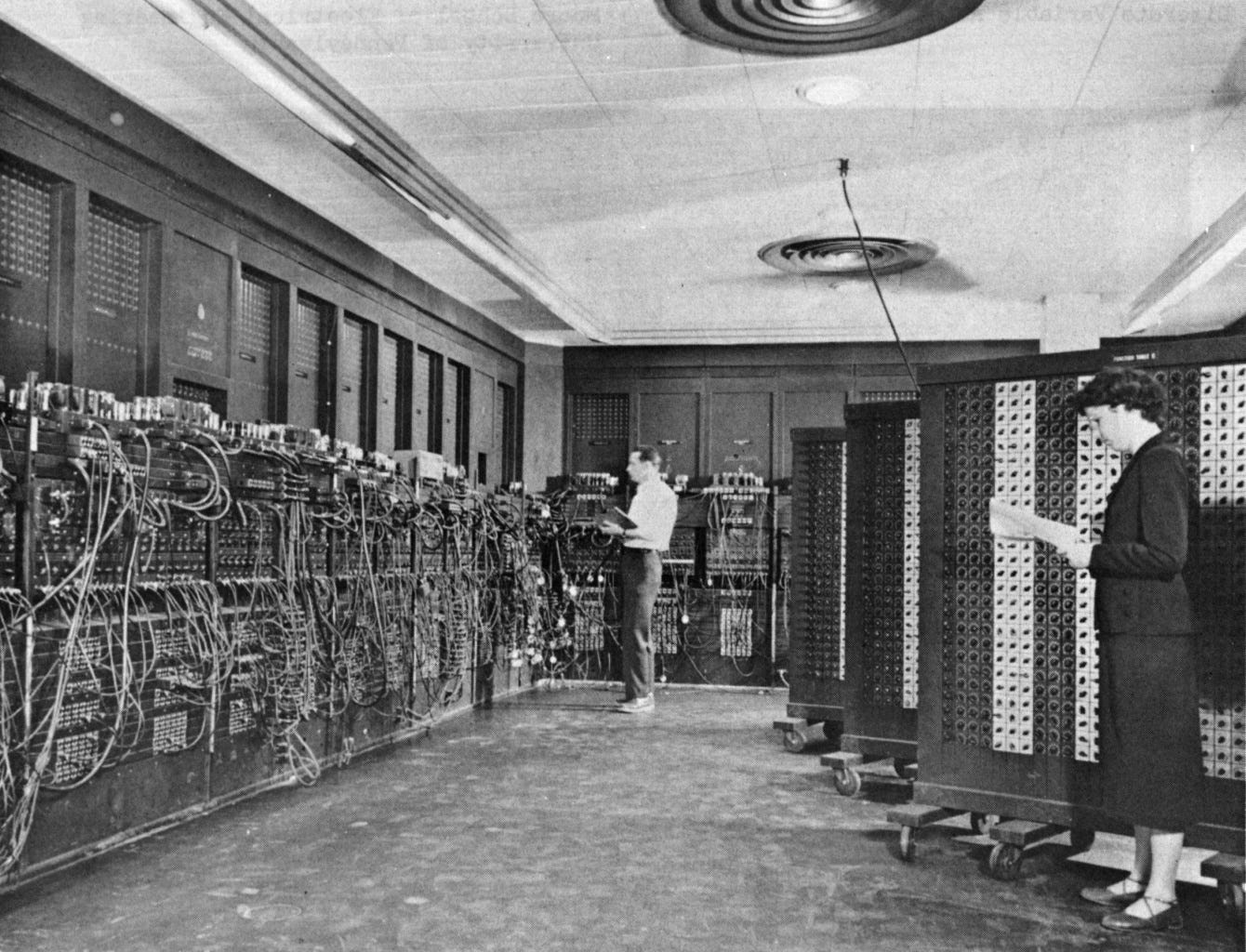

This photo can start to give you an idea of just how big ENIAC was, but allow me to elucidate further. ENIAC’s parts included:

- 18,000 vacuum tubes
- 1,500 relays
- 7,200 crystal diodes
- 10,000 capacitors
- 70,000 resistors

Those components required more than 5 million solder joints. The machine weighed more than 27 tonnes and occupied roughly 1,800 square feet. To operate, ENIAC used about 150 kilowatts of electricity. All of this power, space, and electrical equipment gave ENIAC about 500 FLOPS, though it could be wired for parallel execution, so its total computational power was likely much higher than that. ENIAC represented a breakthrough for electronic computers, but it still lacked stored programs. While it received input via punch cards and printed its results, many programs still required wiring changes, which made programming a mixture of rewiring and punch cards. (The details depend on the year. Research turns up several slightly different methods of operation, because ENIAC was in use for nearly a decade and was upgraded over time.)

UNIVAC, first delivered in 1951, was one of the first commercial computers with every feature we now expect: it was an electronic, programmable, digital, stored-program, general-purpose computer.

UNIVAC was an improvement over ENIAC in every way. It was smaller, more powerful, easier to use, slightly easier on the electric bill, and cheaper to build. The thing is, UNIVAC still wasn’t a microcomputer. Its logic was still built from many discrete components. And while UNIVAC read and wrote its data on magnetic tape, much of that data still began life on punch cards, and cards would remain central to computing well into the 1970s.

Yet the basic methods of construction and operation of computers were now settled. From the 1950s to the mid-1970s, descendants of and competitors to UNIVAC made by IBM, Burroughs, NCR, Control Data, Honeywell, General Electric, Digital Equipment Corporation, and RCA dominated the computing landscape. These ranged from the largest machines, called mainframe computers, to more modest minicomputers like the PDP-8. Vacuum tubes gave way to discrete transistors and then, starting in late 1959 and 1960, to what we would today think of as simple integrated circuits.

It is in the 1970s that things got really interesting. At the start of the decade, IBM introduced a better storage medium: the floppy disk. This changed the game quite a bit. Now it was practical to read, write, and store data without a card punch. Cards were still dominant for most computing due to cost, but the future was bright. Then, in 1971, something seriously huge happened: the first microprocessor was invented, and it is from the microprocessor that microcomputers get their name. We no longer use the term microcomputer. Today, we have supercomputers and computers. In the 1970s and 1980s, however, there were mainframes, minicomputers, and microcomputers, because early microprocessors, while cheap, were not very powerful.

In the 1970s and into the 1980s, a large and wealthy organization might have a mainframe with many terminals connected to it, or a minicomputer with several terminals. Those terminals are what actually kicked off the microcomputer revolution.

In 1969, the Japanese calculator manufacturer Busicom approached Intel with a design for a set of chips. Following ideas from Marcian Hoff, the design was simplified into a general-purpose microprocessor, which Federico Faggin and Masatoshi Shima brought to silicon. The first Intel 4004 was delivered in 1971. Intel was also approached by CTC to make a processor for its Datapoint 2200 terminal. That processor became the 8008. While the 8008 was more powerful and more successful in the market than the 4004, CTC never actually used it. The 8008 was simply not fast enough for the Datapoint 2200, and CTC chose a more conventional processor built from TTL chips instead.

In 1974, Intel released the 8080, another 8-bit Faggin-Shima creation. The 8080 was not binary-compatible with the 8008, but 8008 assembly source could be converted to 8080 source, and automated translation tools were available for the job. The 8080 came nowhere close to the power of the minicomputers or mainframes of its day. It was a toy in comparison to those machines, but it was powerful enough to be useful. The Altair 8800 with Microsoft BASIC proved that a microcomputer could be an essential tool for a small business.

The Motorola 6800 was also released in 1974, but it was a more complex and therefore more expensive processor. The cost made it somewhat less common than the 8080. It was, however, the basis of early paper designs for the Apple I, though the 6502 was ultimately chosen instead on cost.

In 1974, a group of chip designers and engineers led by Chuck Peddle left Motorola for MOS Technology and set out to design a cheaper 6800. Specifically, according to Bill Mensch, they wanted to make a set of chips that could sell for $20 and compete against the Intel 4040. As a result of their efforts, MOS Technology released the 6502 in 1975. This CPU was so much less expensive than its competitors that it was featured in many different computers over time. The biggest names using the 6502 in the 70s were Apple and Commodore. In the 80s, the 6502 was used by Acorn (including in the BBC Micro), Apple, Commodore, Oric, Nintendo, and Atari.

In 1974, Federico Faggin co-founded Zilog, and in 1976 Zilog released the Z80. The Z80 was compatible with the 8080 but was much faster, and it was used in countless CP/M machines. The big names for this one were Tandy, Heathkit, Osborne, Kaypro, Epson, Sinclair, Multitech/Acer, Toshiba, Sharp, and SEGA.

With the 8080, the Zilog Z80, and the MOS 6502, the 70s/80s home micro scene was launched, and things moved very quickly. These chips powered the Altair 8800, the TRS-80, the Commodore 64, the Sinclair ZX Spectrum, and the Apple II. The 8-bit machines were all underpowered. Again, from the perspective of IBM and the BUNCH, these were toys. In the 70s, anyone using an 8-bit micro would have been a hobbyist, a tinkerer, a nerd (I say this affectionately as someone who considers himself a nerd), or a small business owner with a definite need for such a machine. More technically savvy accountants and mathematicians may also have used such machines, but this wasn’t a very large market. S-100 machines with the Intel 8080, kits from many different vendors, completely homebuilt machines, and mixtures of the three were all around. It was a time for hobbyists, enthusiasts, and tinkerers.

In the earliest part of the 80s, this continued with one very large exception: games. Games somewhat cemented the impression that the early home microcomputers weren’t serious machines, but they did get computers into homes despite that perception. The TRS-80 line, the Commodore machines, the Sinclair machines, and the Atari machines became gaming machines. Perhaps a little surreptitiously, these machines were also teaching people about programming, since they all ran BASIC, and about the capabilities of computers. In the case of Sinclair, this educational aspect was entirely intentional.

In 1979, Intel released the 8088. This was a 16-bit chip with an 8-bit external data bus, which allowed for less expensive expansion hardware. The 8088 was a variant of the 8086 released the year before, which had a full 16-bit bus. Both chips saw use in hobbyist S-100 bus computers, but the big change for the entire microcomputer market came from IBM in August 1981. This was the big one: IBM released the PC. Microcomputers were suddenly a very serious and very big market. The toy image started to fade, and so did the idea that no one would need a computer at home.

Throughout the 1970s, IBM had built several small computers as internal projects and a few with limited releases. Bill Lowe drew on that knowledge and experience, but he realized that problems in IBM’s culture and procedures would prevent Big Blue from releasing a successful microcomputer, so he convinced management to try something quite different in the Boca Raton lab. Within a single year, the Boca Raton team had designed a machine built from off-the-shelf parts with only one proprietary component, the PC BIOS. This was the IBM 5150, the IBM PC.

The IBM PC wasn’t a games machine. It had a very poor display adapter (unless you purchased a better video card), a terrible speaker, and the best darn keyboard ever made. Yet IBM wasn’t completely deaf to the market it was entering. The 8088 was chosen deliberately to keep down the cost of both the unit and its expansion hardware. The PC also shipped with support for cassette storage and TV output, both typical of the market in which the PC was meant to compete. The IBM PC was neither the most powerful nor the most affordable machine of its time, but it was the IBM machine. Big Blue dominated the computer market for businesses and governments, and the IBM brand was strong. This made the PC sell quite well.

A side effect of the PC doing well was that IBM’s partners did well too. Intel and Microsoft became the market leaders they are today thanks to IBM’s PC. One year after launch, over 700 software titles existed for the PC, and the first IBM PC clones were on the market. IBM’s brand was so strong that, despite not being the best, the PC became the standard, and it did so immediately.

Of course, the competition did not immediately become solely PC clones. The IBM PC cost far more than the other early home micros, so those machines carried on, and they kept improving. Wonderful machines like the Amiga, the Atari ST, and the Apple IIe and IIc were made. The Lisa and the Macintosh were also released and competed at the high end. Despite consistent and strong competition, IBM and compatibles remained dominant through the 80s and up to the present day.

In October 1985, Intel released the 386, a significant 32-bit upgrade to its line of 16-bit 8086-compatible processors. It was, in fact, so powerful an upgrade that the industry began changing quickly. The 386 was no toy. It could compete quite solidly with mainframes of just 10 years earlier, at a fraction of the cost. Minicomputers began to disappear rapidly following the arrival of the Intel 386 and the Motorola 68020. These chips could compete with then-current low-end to midrange minicomputers, again at a fraction of the cost. Over the late 70s and through the 80s, the multi-chip TTL logic inside mainframe and minicomputer processors was also largely replaced by more highly integrated chips and microprocessors. The difference between the three classes became merely one of scale. Finally, the microcomputer was no laughing matter.

Up to this point, UNIX-like systems such as Coherent did bring UNIX-style computing to rather modest hardware, but there were limitations. Microcomputers were so meager in resources that they really needed operating systems that wouldn’t impact performance, which is why PC-DOS and MS-DOS were the operating systems for the PC and compatibles despite more sophisticated systems existing. Booting to BASIC on other home micros was done for largely the same reason. It also helped a great deal that DOS or BASIC came with the machine. With the 386, it was no longer that crazy to use a more sophisticated operating system. The 386 meant that a better Windows could be made, and a better Windows was made. Windows 3.0 brought protected mode to Windows on the Intel 286 and later chips, Windows 3.1 made protected mode a requirement, and Windows for Workgroups 3.11 brought 32-bit networking and file access. Windows 95 then brought significant changes to the user experience on PCs. Windows NT, released in 1993, was a fully 32-bit operating system for the PC, and it was architecturally similar to VMS. From IBM, there was OS/2. OS/2 was an amazing operating system that ran initially on the IBM PS/2 and later on many more machines from Big Blue. It was similar to UNIX and to Windows NT, and it could run both DOS and Windows programs. While initially 16-bit, OS/2 did become a fully 32-bit operating system.

Linux was also made for the 386, in 1991. With the rise of the commercial internet in the 90s, Linux would explode as a server operating system. Microcomputers were no longer limited to disk operating systems; now they could run the same kind of software that mainframes did. During the Dot Com bubble of the late 1990s, there was a time when just mentioning Linux could increase a stock price. Linux quickly became a major server operating system, then a mainframe operating system, and then the supercomputer operating system. Linux was everywhere rather quickly. IBM offered its first Linux-powered mainframe in 1998. Oddly, it didn’t penetrate the consumer desktop space all that well, despite that being its origin.

The internet was really the innovation that changed the market. While more powerful and user-friendly machines grew the market, the internet gave non-technical people a true reason to buy a computer for their homes. Computers were now becoming truly mainstream, and no longer solely the province of enthusiasts.

Subsequent Intel chips improved on the 32-bit design, but competitors remained. Quite amazing microprocessors were released in the 80s and throughout the 90s: MIPS in 1983; SPARC, ARM2, and the Motorola 68030 in 1987; POWER1 in 1990; the DEC Alpha in 1992; and many more. It was a wild time when innovation was constant and relentless. Costs kept most of the more exotic architectures confined to large machines and workstations, but ARM and Motorola chips did show up in consumer devices. Then, in 2003, AMD released the Athlon 64, based on the K8 microarchitecture, and the consumer 64-bit era began. AMD64 has been dominant from the mid-2000s to now, which makes it the longest era in microcomputers so far. The fact that it’s still x86-compatible is remarkable: a modern Ryzen Threadripper is still (mostly) compatible with an 8086 from 1978. Further, since automated source translation tools existed for moving 8008 code to the 8080 and 8080 code to the 8086, some source-level compatibility with the 8080 and even the 8008 is still theoretically possible.

IBM still offers modern mainframe computers, and they still have sensible use cases. They have fallen in price and are now roughly the size of a refrigerator or two. Cloud platforms now unify entire datacenters into a single unit, and with clients running on handheld microcomputers, this looks oddly similar to the mainframe and terminal arrangement of the 70s and 80s. Supercomputers have always been a subset of the mainframe computer, and those are still with us as well.

Today, we no longer say “microcomputer” but instead refer to just “computers.” More commonly, a technologist may refer to server, workstation, desktop, laptop, handheld, or embedded computers to differentiate use-case, compute power, storage capacity, and form factor, but these are all just microcomputers. We don’t call them microcomputers. We don’t even refer to smartphones as computers at all. The microcomputer won and its ubiquity made any distinction somewhat pointless.

Lived through this entire era, remember all the shifts & turns. Started with S-100 computer with 8080, TRS-80 with Z-80, Atari 800 with 6502, Radio Shack Color Computer with 6809, finally IBM PC. Worked at Bell Labs, learned Unix & C, programmed 68000 system for network design. Hired in Switzerland where replaced DEC PDP-11 microcomputer (yes we called them that) systems with IBM PC/AT’s. Was an exciting time, loved small computers & believed everyone should own one, which they do now 🙂

“It was, however, used in the first Apple I computers (later Apple Is were made with the 6502).”

It was used in early paper designs, but I can find no evidence it was ever put into a motherboard, so “made” is a stretch here.

“and the best darn keyboard ever made”

Typo: should be “loudest”.