Intel the CPU company

Making the Micros Mighty

This is the fourth entry in a multipart series. You may be interested to read about Shockley Semiconductor, Fairchild Semiconductor, the start of Intel, and the rise of the 8086.

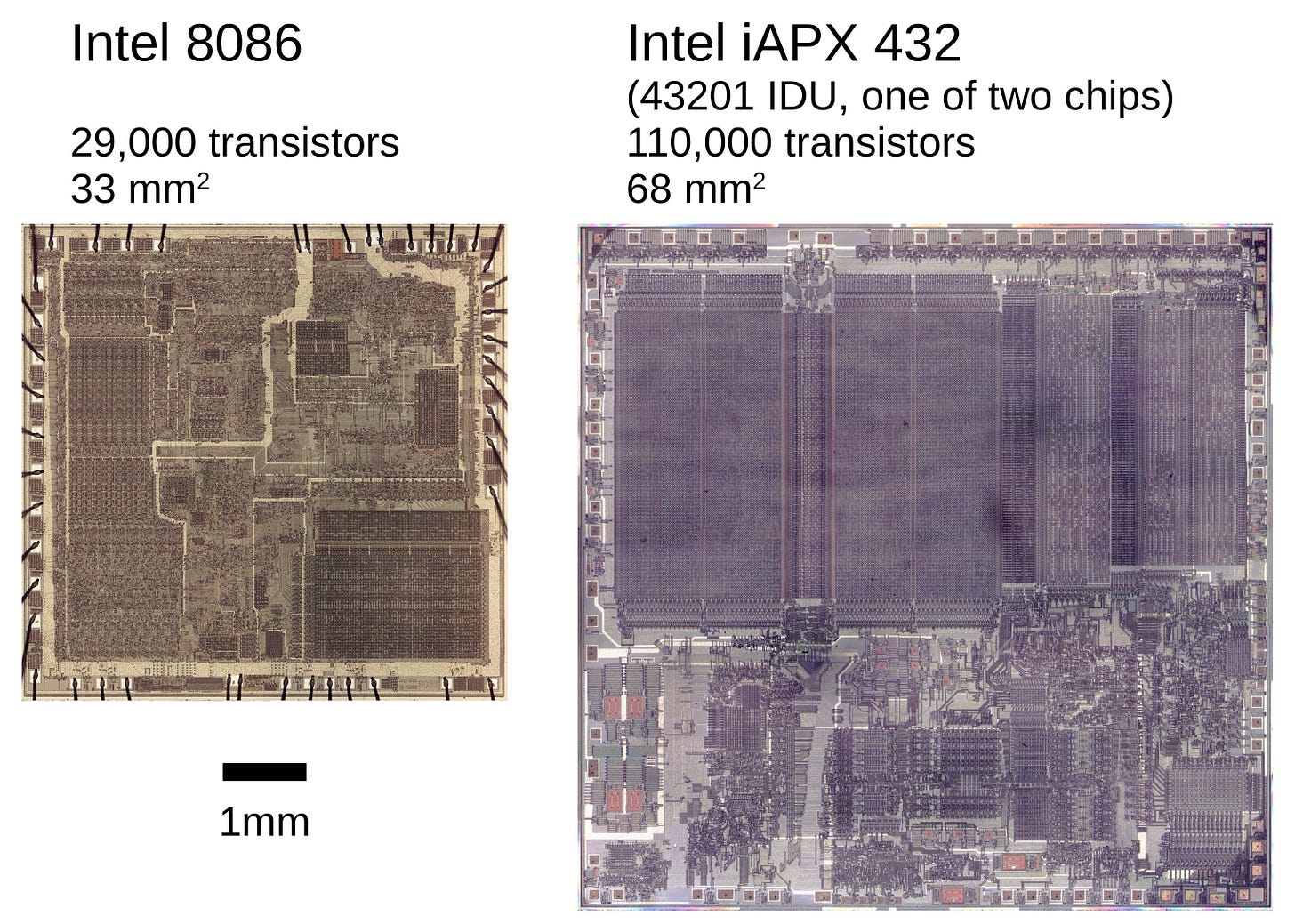

At the start of the 1980s, Intel was in the absolute best position possible for a microprocessor manufacturer. They’d won IBM’s business with the 8088, and they had around another 5000 customers for the 8086/8088. While the company was undoubtedly enjoying this success, they did have one failure. At the end of 1981, the company released what they’d intended to be the future of the microprocessor business, the iAPX 432. This was the first attempt to implement object orientation in silicon and Intel’s first attempt at a 32bit CPU, and it went much further than those two things. The 432 additionally moved process scheduling, interprocess communication, garbage collection, and storage allocation into hardware. At the hardware level, the CPU (here called the General Data Processor, or GDP) was built of two 64-pin VLSI chips. There was a third chip, the Interface Processor, which handled communication and data transfer for I/O. This Interface Processor connected the GDP to the Intel Multibus, and there could be several of these busses, each controlled by an Intel 8086. That 8086 connected to memory and some I/O devices. This setup meant that all input and output was actually being handled by the 8086, which in this context was called an Attached Processor.

At the software level, the initial design for the 432 had it using ALGOL 68 as its assembly language, but by the time of release this had been changed to Ada. The 432 supported two types of instructions, scalar and object. The scalar instructions included move and store operators, boolean, binary and floating point arithmetic, and comparators. All scalar instructions operated on 8bit characters, 16bit and 32bit integers (signed or unsigned), and 32bit, 64bit, and 80bit floats. The architecture was stack based with operands fetched from the stack and results pushed back, and it lacked general purpose registers.

In this design, ALL system resources were represented as objects. So, for example, the state of the GDPs and IPs in a 432 system was represented by processor objects. These processor objects then had a series of child objects that were representations of processes to be executed. The addressing of objects was then handled via access descriptors, or ADs. There were objects for scope, type, storage, ports, and even instructions.

If this is all sounding complicated and over-engineered, that’s because it is, and when looking at the block diagrams in Intel’s patents around the 432, things get far more complicated. All of this immense complexity resulted in a system that was around a quarter of the speed of the 8086 in general use. As for why the performance was so abysmal, one account providing a plausible explanation stated it was the switch to Ada. This happened so late in development that the compiler team had little time to develop tooling, and the software team had even less time to develop the iMAX 432 operating system. All of this resulted in many common operations requiring more than a hundred cycles. Given poor performance and the cost of an Ada compiler being more than a car at the time, there was essentially no market for the 432, and Intel wasted around $100 million. Of course, the side effect was that the world got the 8086.

With a major win and a major loss, Intel marched into 1982 as the largest manufacturer of metal oxide semiconductor ICs on Earth. The company had facilities in the San Francisco Bay area, Portland, Phoenix, Austin, Albuquerque, Puerto Rico, Malaysia, the Philippines, Japan, Barbados, Israel, Belgium, and the UK. Intel’s sales offices could be found in 27 countries. The 2164A DRAM of 64K started volume production in 1982, and Intel released the 27128 EPROM that provided 128K, the Intel Personal Development System, the 8096 microcontroller, the 80150 (CP/M on a chip), and the Intel 80186/80188.

The 80186 was a 16bit microprocessor with a 20bit address bus. As with the 8086 and 8088, the 80188 was roughly the same CPU as the 80186 but with an 8bit data bus. The initial chips were clocked at 6MHz, and compared to an 8086 at the same speed, the 80186 was slightly faster due to microcode improvements. The instruction set for the 80186 was slightly expanded over that of its predecessor, and those extensions were found in later x86 chips as well as the NEC x86 chips. Unlike the 8086, the 80186 integrated the clock generator, interrupt controller, timers, wait state generator, and a few other components. It did not, however, include memory. So, those wishing to use the 80186 as a microcontroller would still require ROM and RAM.

Work on the Intel 80286 (or iAPX 286) had started in 1978, and the CPU was brought to the world on the 1st of February in 1982. This was a 16bit CPU with 24bit addressing allowing it to support up to 16MB of RAM. The first of these to come out of Intel could be found at clocks of 5MHz, 6MHz, or 8MHz. Later models could be clocked up to 25MHz. The 80286 was built of 120,000 transistors (later models used 134,000 transistors) on a 1.5 micron HMOS process. It came in a 68-pin PGA, but other packaging options were available. Depending upon the clock rate, the processor could achieve from around 900,000 instructions per second up to 2.66 million instructions per second. In most cases, the 80286 delivered roughly double the performance of the 8086 at the same clock. The chip included the new instructions from the 80186, but it added more for its new protected virtual address mode. The protected-mode was meant to enable the use of advanced multitasking and multiuser operating systems, and thus provide Intel entry into the server and workstation markets. For this, the chip provided different segments for data, code, and stack and prevented the overlapping of these segments. Each segment then had a privilege level assigned to it, where a segment of a lower privilege level could not access segments of a higher privilege level. These advancements were not commonly used by most who owned a 286, as the chip couldn’t switch back to real-mode from protected-mode efficiently. The most common way this switch was completed on a 286 was the method employed by IBM’s OS/2: triple fault the CPU, trigger a shutdown cycle, have the motherboard reset the CPU, and have the BIOS skip POST and jump to a specified memory address immediately following the return of CPU execution. This was slow, and OS/2 didn’t launch to the public until December of 1987, by which time Intel had shipped 5 million 286 CPUs. The mode switch was sped up in later models with microcode changes (hence the later increase in transistor count) made by Intel while working with Digital Research. This is Intel, so the 80286 was accompanied by a suite of support chips, but unlike the company’s prior CPUs, the 286 had an integrated memory controller. Beyond OS/2 already mentioned, the 286 saw ports of Concurrent DOS, Windows, XENIX, Coherent, and Minix. The majority of 286 owners, of course, would have been running PC-DOS or MS-DOS.
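
For readers who think better in code, the privilege rule described above can be sketched in a few lines of Python. This is a simplified model rather than a description of the 286's actual circuitry: the `Segment` class and `can_access_data` function are hypothetical names of my own, and the check shown (the weaker of the current and requested privilege levels must be at least as privileged as the target segment) covers only the data-segment case. Note that in x86 numbering, ring 0 is the most privileged and ring 3 the least.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Segment:
    """Hypothetical stand-in for a protected-mode segment descriptor."""
    name: str
    dpl: int  # privilege level assigned to the segment, 0 (most) to 3 (least)


def can_access_data(cpl: int, rpl: int, target: Segment) -> bool:
    """Return True if code at privilege `cpl`, using a selector that
    requests privilege `rpl`, may use `target` as a data segment."""
    # The numerically larger value is the less privileged of the two.
    effective = max(cpl, rpl)
    return effective <= target.dpl


kernel_data = Segment("kernel_data", dpl=0)
user_data = Segment("user_data", dpl=3)

print(can_access_data(cpl=3, rpl=3, target=kernel_data))  # False
print(can_access_data(cpl=0, rpl=0, target=user_data))    # True
```

An operating system would place its own segments at ring 0 and application segments at ring 3, so that an application could not read or overwrite kernel data.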

Intel closed 1982 with $30,046,000 in income on $899,812,000 in revenues. As seen nearly every single year of Intel’s life up to this point, the company had been heavily investing in expansion. Intel’s rapid growth meant that the company could outproduce their competitors, and this brought them a 72% market share in the 16bit microprocessor market in 1983.

In 1983, the company released new microcontrollers, the 186 and 286 were in volume production, more than 60 new microcomputers were released featuring the 8086/8088, and while the company was no longer the king of the memory market, they were still strong. The company released a 256K EPROM, a 4K NVRAM, and the Intellec Series IV development computers. Intel crossed the billion dollar mark closing the year with revenues of $1,121,943,000 yielding income of $116,111,000.

In 1984, Intel dramatically increased their R&D spend to $180 million (about 11% of revenues), they introduced 70 new products, tripled CPU production every quarter, and introduced a new CHMOS process. Among those new products were CHMOS DRAMs, EPROMs, network controllers, the MULTIBUS II specification, and the BITBUS serial communication specification for industrial applications. Revenues for 1984 were $1,629,332,000 with income of $198,189,000.

In the year 1985, the semiconductor industry suffered from oversupply and decreased demand. Across the industry, companies had been racing to produce more as no matter how much they sold, people kept desiring more. That condition changed, and memory prices fell sharply. In a reaction to that state of affairs, President and COO Andy Grove and Chairman and CEO Gordon Moore announced that Intel was leaving the memory business. Their one remaining investment in that industry was EPROMs. From this point forward, the primary market for Intel was microprocessors. Within that industry, the company would produce CPUs, peripheral chips, microcontrollers that combined CPUs and peripheral chips into one, and development systems that provided the software market with systems to target Intel’s products. Internally, the company then began to emphasize increasing their yields, reducing per unit cost, providing their customers with reliable and on-time delivery, and leading the market in CPU architecture.

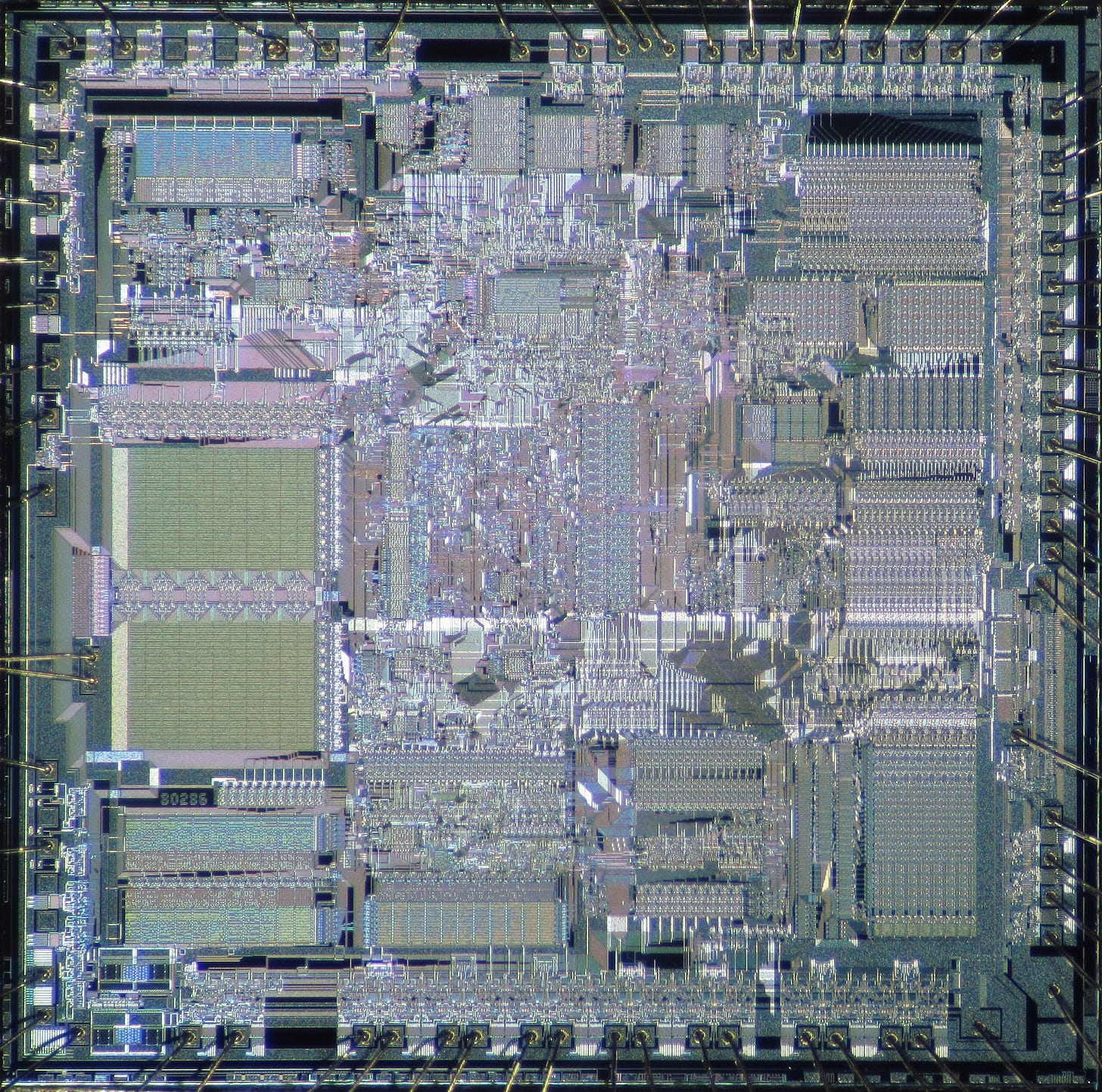

The first CPU from the now decidedly CPU-oriented company was the Intel 80386 (or i386) in mid-October. The development of the 386 had begun as soon as the 80286 had been completed, and that development cycle cost the company nearly as much as the iAPX 432 had. The architecture of the 386 was led by John Crawford, but he didn’t work alone. The 386 team included Jan Prak, Jim Slager, Gene Hill, Chris Krauskopf, Jill Leukhardt, Pat Gelsinger, Greg Blanck, and many more. There were some folks like Les Kohn who, while never assigned to the project, contributed greatly to the design and implementation. This was the first time that Intel made extensive use of automated system design, simulation, CAD, and UNIX tools in the design of a CPU, and it was the first large scale use of CMOS. In all of this, Gelsinger became more important than he might have otherwise as he was Intel’s resident UNIX expert. His role grew over time in the project, and he led the tapeout team.

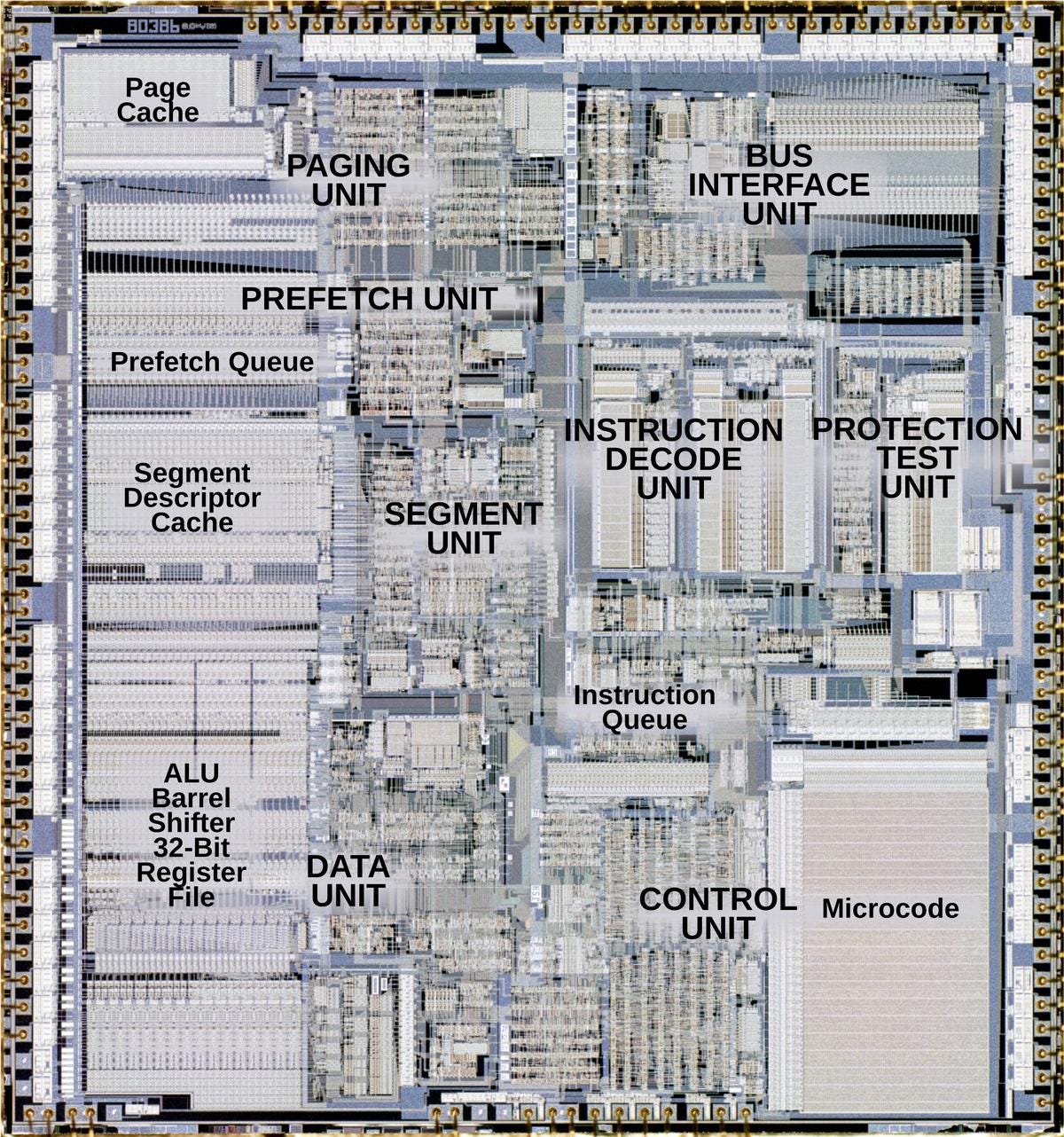

The Intel 80386 was built, at launch, of 275,000 transistors on a 1.5 micron CHMOS III process. Clocked at 16MHz, it could achieve performance of around 4 million instructions per second. It was shipped in a 132-pin PGA with more packaging options available depending upon the application. Later models could be clocked from 12.5MHz up to 40MHz, were made on a 1 micron CHMOS IV process, and could have up to 855,000 transistors on chip (SL variant). The 386 was a 32bit processor with a 6 stage instruction pipeline and an on-chip MMU, and it offered real-mode, protected-mode, and virtual-mode. In protected-mode, the 386 could address 4GB of RAM. If segmentation were used with protected-mode, the 386 could address up to 64TB of virtual memory. A key advantage of the 386 was that to any program (and programmer) the 386 would behave as if it had a flat memory model while in protected-mode despite the hardware using segmentation. The 386 also once again extended the x86 instruction set, added new registers, and greatly improved backward compatibility. With the 386, the machine could make use of virtual-mode, that is virtual 8086 mode, to run multiple real-mode (usually MS-DOS) programs simultaneously. This processor also introduced a name change. Rather than have a 386 and a 388, Intel opted to refer to the fully 32bit part as the 386DX. A 386 with a 16bit data bus was a 386SX. Another major distinction between the two variants was that the 386SX only had 24 pins connected on the address bus, which limited RAM to just 16MB. In practice, most home users at the time wouldn’t even have had 16MB of RAM. The 386 saw tremendous operating system support with Windows, NT, OS/2, Linux, BSD, XENIX, PC-MOS/386, Coherent, LynxOS, BeOS, Integrity, and NetWare. Aside from early 386 workstations, however, most owners of a 386 would still have been running MS-DOS or Windows.
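
The memory limits quoted above all fall out of simple powers of two, and a short Python sketch makes the arithmetic explicit. The function name `addressable_bytes` is my own, and the breakdown of the 64TB figure is an assumption on my part: I am taking it to be 2^14 selectable segments, each up to 4GB in size, which is the usual way that number is derived.

```python
MB = 2 ** 20
GB = 2 ** 30
TB = 2 ** 40


def addressable_bytes(address_bits: int) -> int:
    """Bytes reachable with the given number of address lines."""
    return 2 ** address_bits


# 20 address bits: 8086/8088 and 80186/80188
print(addressable_bytes(20) // MB)  # 1

# 24 address bits: 80286 and 386SX
print(addressable_bytes(24) // MB)  # 16

# 32 address bits: 386DX in protected-mode
print(addressable_bytes(32) // GB)  # 4

# Assumed derivation of the virtual figure: 2**14 segments of 4GB each
selectable_segments = 2 ** 14
print(selectable_segments * addressable_bytes(32) // TB)  # 64
```

The physical limits come from the number of address pins, which is why the 386SX, with only 24 connected, topped out at the same 16MB as the 286.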

The 386 is easily among the most important CPUs ever made. While it may not have been as powerful as some rivals, it was the chip that allowed commodity microcomputers to really contend with workstations and minicomputers at a far lower price. This was also the enabler of Compaq’s breaking of IBM’s stranglehold on the PC platform, and it allowed Windows to become a default choice for PC-compatibles.

Intel closed 1985 with $1,364,982,000 in revenues and income of $1,570,000. While I am certain that this sharp drop in profit worried many, Intel’s future was brighter than ever. The company had their development systems ready for the 386, and many vendors had already either developed system designs around the 386 or had committed to do so.

I have readers from many of the companies whose history I cover, and many of you were present for time periods I cover. A few of you are mentioned by name in my articles. All corrections to the record are welcome; feel free to leave a comment.