The Itanic Saga

The History of VLIW and Itanium

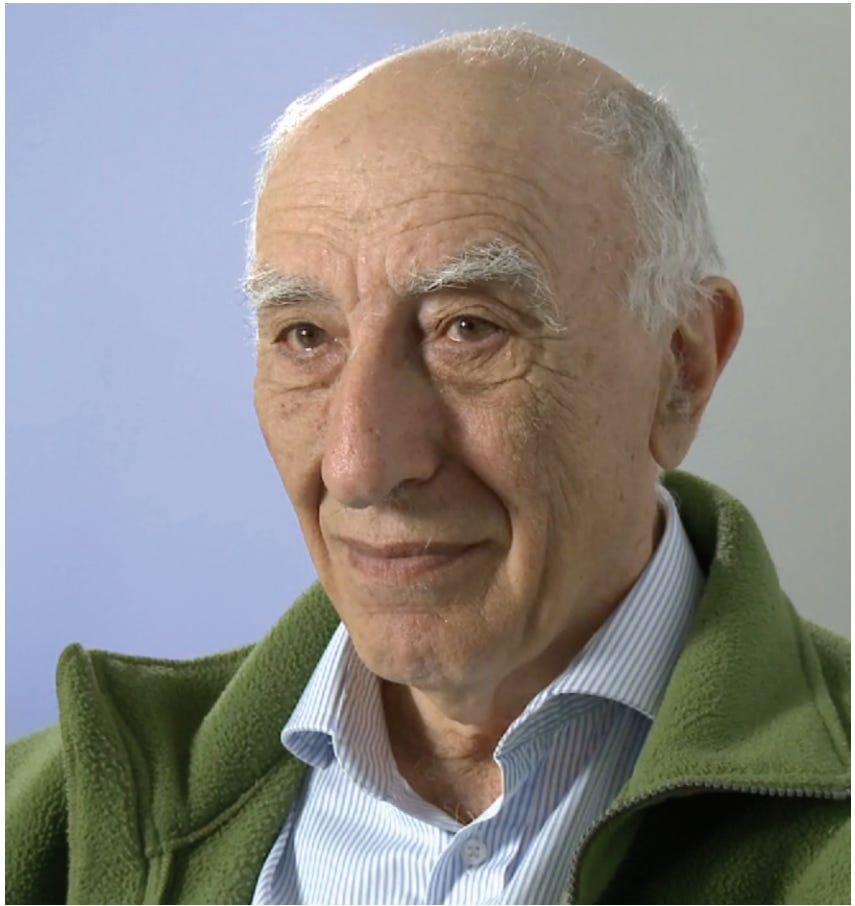

Mikhail Alexanderovich Kartsev was born on the 10th of May in 1923 in the USSR, then a nation newly reborn of revolution, to a family with a background in teaching. He graduated from high school on the 21st of June in 1941, and the Third Reich invaded the USSR the following day. Kartsev, along with a multitude of his countrymen, was drafted into the Red Army. His service to his country began in October of that year with enlistment in a tank regiment. He saw combat in the northern Caucasus, Ukraine, Romania, Hungary, Czechoslovakia, and Austria. Fate was kind to Kartsev, and he survived the war without any grievous physical harm. His enlistment ended in February of 1947, by which time he had received the Order of the Red Star as well as medals for courage, for the capture of Budapest, and for victory over Germany.

After the war, Kartsev’s time in the military came to an end, and he began studies in the radio faculty of the Moscow Power Engineering Institute. He earned his degree in just three years and began graduate studies in 1950. This brought him to a rather exciting new project that would set the path of his career: the M-1. This was one of the USSR’s first computers, and it was among the first stored-program computers in the world. The project was led by Isaak Bruk and Nikolay Matyukhin. Kartsev proved to be both talented and bright. These qualities earned him a permanent position in Bruk’s laboratory and the lead role on the M-2. The M-2 project was completed in a year and a half with a very small team. It wasn’t the most powerful machine in the USSR, but Kartsev said it was a solid computer. The M-1 and M-2 were computers made for civilian use.

At the end of 1957 or early in 1958, a second laboratory was opened at the Institute of Electronic Control Machines of the USSR Academy of Sciences, with Kartsev as the lab’s leader. His first project was the EUM M-4, followed by the M-4M. The M-4M was capable of twenty thousand operations per second. It used 23-bit fixed-point binary numbers. Its RAM held 1K of 24-bit words, and it had a program ROM of 1280 30-bit words. I/O control was separated from the main CPU and had its own RAM. I/O had fourteen channels with a combined throughput of six thousand numbers per second. This was a fully transistorized, real-time machine, and it was primarily used by the military for armament control. For this work, Kartsev earned the USSR State Premium (I understand this to have been one of the highest awards in the USSR).

In 1962, unsatisfied with the performance of the M-4M, Kartsev set about the design of a new computer. The M4-2M was finished by the 14th of March in 1963, after less than a year of total development time. This machine was capable of two hundred twenty thousand operations per second for programs stored in ROM. For programs loaded into RAM, performance was roughly halved. RAM ranged from 4K to 16K 29-bit words, and ROM was 4K or 8K, depending upon configuration. I/O speeds were slightly higher than the M-4M’s, but the I/O system was similar in construction. One key feature of the M4-2M was that arithmetic, logic, and control operations (including matrix multiplication) were executed in a single machine cycle. The machine was also capable of floating point arithmetic. If this wasn’t enough power, the machine could be complemented with an M4-3M peripheral calculator and hardware interface system. RAM could be expanded after installation through the use of magnetic drum. An M4-2M equipped with the 3M was capable of roughly four hundred thousand operations per second. Some M4-2M installations remained in operation for roughly thirty years, with time to failure usually exceeding one thousand hours.

In 1966, building upon the single-cycle nature of the M4-2M’s arithmetic and control, Kartsev designed a system that would be capable of one billion operations per second. To achieve this, the proposed M-9 would utilize a RISC-like architecture that was explicitly parallel. In a single cycle, the machine would be able to execute thirty-two instructions. This machine never went into production, but it was the first design of a very long instruction word (VLIW) machine. The primary idea that makes a VLIW system stand out from any other is that each instruction word carries multiple instructions. Deciding which instructions can safely share a word then becomes the duty of the language compiler or the assembler (either software or human) rather than the hardware. Sergey Lebedev’s BESM-6 was chosen for those applications where the M-9 would have been useful.
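The following is a minimal Python sketch that makes the compiler’s role concrete by packing operations into fixed-width instruction words. It is a hypothetical illustration and not the M-9’s actual scheme. Several things are assumptions: the `Op` type, the `bundle` function, the slot width, the unit-latency rule (a result becomes usable one word later), and registers that are each written only once. Real VLIW compilers, including those using Fisher’s trace scheduling, must also handle operation latencies, functional-unit types, and branches.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Op:
    dest: str              # register written (assumed to be written only once)
    srcs: tuple[str, ...]  # registers read
    text: str              # readable form, used only for printing


def bundle(ops: list[Op], width: int) -> list[list[Op]]:
    """Greedily pack ops into instruction words holding at most `width` ops.

    Assumes unit latency: a result produced in word k can be read in word k + 1.
    `ops` must be listed in a valid sequential (dependency-respecting) order.
    """
    ready_at: dict[str, int] = {}  # register -> first word allowed to read it
    words: list[list[Op]] = []
    for op in ops:
        # Earliest word in which every source operand is available;
        # registers not produced by any op are program inputs (ready at 0).
        k = max((ready_at.get(src, 0) for src in op.srcs), default=0)
        # Skip forward past words whose slots are already full.
        while k < len(words) and len(words[k]) >= width:
            k += 1
        while len(words) <= k:
            words.append([])
        words[k].append(op)
        ready_at[op.dest] = k + 1
    return words


# (a + b) * (c + d) + e * f, written as sequential three-address code.
ops = [
    Op("t1", ("a", "b"), "t1 = a + b"),
    Op("t2", ("c", "d"), "t2 = c + d"),
    Op("t3", ("e", "f"), "t3 = e * f"),
    Op("t4", ("t1", "t2"), "t4 = t1 * t2"),
    Op("t5", ("t4", "t3"), "t5 = t4 + t3"),
]

for i, word in enumerate(bundle(ops, width=4)):
    print(f"word {i}: " + " | ".join(op.text for op in word))
```

With a width of four, the five sequential operations fit into three words: the three independent operations at the top share the first word. In this model, the hardware would simply issue each word as given, without checking dependencies itself.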

In 1969, Kartsev began work on the M-10, which was essentially an M-9 with vector processors. This machine was in use by 1973, largely for missile detection and warning systems as well as in the Soviet space program. Toward the middle of the 1970s, the M-10 saw use in scientific research, first in solid matter studies and later in plasma modelling and collapse. This machine was followed in 1978 by the M-13, which improved on the M-10 in most ways through the use of LSI. The M-13 was… extremely wide, with two hundred twenty-five parallel execution units. This machine also saw use in the Soviet space program. Kartsev put his work on these VLIW machines to paper in his book “Computation systems and synchronous arithmetic.” During his career, M. A. Kartsev was awarded the Order of the Red Banner of Labor, the Order of Lenin, the Order of the Badge of Honor, the Order of the Red Star, the State Premium (as mentioned previously), and numerous medals.

Boris Babayan was born in Baku, Azerbaijan, in 1933. He graduated from the Moscow Institute of Physics and Technology in 1957. He was awarded the USSR State Premium and the Order of Lenin for his work on the Elbrus 2 at the Lebedev Institute. The Elbrus 1 (developed and built from 1972 to 1978) and Elbrus 2 (developed and built from 1980 to 1985) were essentially superscalar, out-of-order reworkings of the 1965 BESM-6 RISC design. They were built in LSI, with each CPU comprising several discrete chips. These machines added type safety, hardware support for high-level languages, and failsafe capabilities. Babayan began working on a successor in the mid-1980s. This was the Elbrus 3, a VLIW machine building on Kartsev’s ideas. It dropped the high-level language support of the Elbrus 1 and 2, and it went wide with sixteen execution units. The first Elbrus 3 chips were fabricated in 1991. According to Babayan, one major limitation of the Elbrus 3 was that Russia’s VLSI technology wasn’t great at the time.

In the early summer of 1990, Mikhail Gorbachev and eleven Soviet computer scientists visited Silicon Valley. They were looking to establish joint ventures with American companies, to share technology and ideas, and to build some goodwill. Gorbachev then invited some of the people the Soviet team had met to visit Russia. Thurman John Rodgers and Roger D. Ross, among others, made that visit. At a meeting in Moscow, Babayan gave a presentation on the Elbrus 3. Upon returning to the USA, Rodgers published a paper on the Elbrus 3. Bill Joy read this paper, and in late 1990, he flew to Moscow to meet Babayan. Over large amounts of food and vodka at a restaurant, the two talked for several hours.

The next group to arrive consisted of representatives from both Sun Microsystems (with Joy returning) and Hewlett Packard. HP was mostly interested in gaining information. Sun, and in particular Dave Ditzel (at Bill Joy’s direction), was willing to fund further work on the Elbrus 3 design and implementation. This led to the creation of the Moscow Center for SPARC Technology (MCST) while other parts of the former Soviet computer world were collapsing. The work between Sun and MCST went on for several years.

HP had hired Josh Fisher in 1989. Fisher was born on the 22nd of July in 1946 in the Bronx. He earned his BA in mathematics from NYU, and then his PhD in 1979. For his thesis, he created the trace scheduling compiler algorithm. He also coined the terms instruction-level parallelism and VLIW. Fisher had no knowledge of Kartsev’s work at the time, as the Soviet Union wasn’t going to release any information on military systems. HP called its VLIW implementation based upon Fisher’s work EPIC (explicitly parallel instruction computing). It was intended to be a successor to PA-RISC, and the chip’s initial code name was PA-WideWord. Intel had launched the i860 in 1989. It was a VLIW chip also based upon Fisher’s work, but it was failing in the market. This failure was largely due to the complexity of the compilers required to accurately predict the runtime path of any given instruction through the chip. As compilers improved, so did the i860. The issue was that by the time the i860’s performance was really acceptable, traditional RISC machines had already taken the market. They were also far easier to develop for and cheaper. This contrasts very strongly with Elbrus, of which Babayan said:

When western computers arrived, those young people came up to me and said that it was impossible to debug all this in western computers. Elbrus is very good for debugging. The productivity of software designing increased by ten times, we had ten times shorter debugging time, because of type safety. And trustfulness, because if the program has no interrupt, everything is correct. Plus failsafe capability, very strong trustfulness, no program mistake, no hardware fail. Unfortunately, after this economic changes, it was impossible to continue work on security. I tried to do this in Intel, too, but it was not easy to introduce, though it is very, very, very advanced technology.

The contact between east and west triggered a rapid change of direction for many western companies. According to Babayan, Elbrus 3 was far ahead of western designs. This is somewhat borne out by what happened after these companies saw the design: they all wanted it. Sun immediately started a VLIW project, and HP and Intel teamed up to work on a new 64-bit VLIW design. For HP, producing its own chips wasn’t a great idea. It wouldn’t have the kind of volume to make the enterprise economical, but Intel would. For Intel, sharing the cost of development was an obvious win. And so it was that PA-RISC and i860 would have a shared successor. This partnership was formalized in 1994, with John Crawford stating “when we saw WideWord, we saw a lot of things we had only been looking at doing, already in their full glory.” Sun’s VLIW project, however, was abandoned. In 1995, David Ditzel left Sun and founded Transmeta along with Bob Cmelik, Colin Hunter, Ed Kelly, Doug Laird, Malcolm Wing, and Greg Zyner. Their new company was focused on VLIW chips, but that company is a story for another day.

In the west at this time, things were getting extremely competitive. Alpha, PowerPC, SPARC, MIPS, and 32-bit x86 were all battling for space in workstations and datacenters. There was an emerging view that RISC had shortcomings in the area of parallelism and dynamic instruction scheduling, and some worried that clock speeds would fail to increase. Some designs of the day addressed this with pipelining (and, more importantly, superscalar designs with multiple pipelines), speculative execution, branch prediction, and out-of-order execution. The problem with these approaches is that if a branch is mispredicted, the speculative work in the pipeline(s) is useless and must be flushed. The deeper the pipeline, the worse the performance penalty. With VLIW/EPIC, this complexity is moved to the compiler, which tells the CPU what can be run simultaneously. This simplifies the hardware around pipelining and the like, but the software ecosystem then grows enormously more complex. For the Elbrus team, this wasn’t as big a problem. Their software and hardware were all designed in-house, with the software tailored to the hardware. The use cases for the Elbrus CPU were also extremely well known to the designers and could therefore be easily optimized.
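The pipeline-depth penalty can be sketched with the usual back-of-the-envelope model, in which every mispredicted branch costs roughly one pipeline’s worth of flushed cycles. The function name and all numbers below are hypothetical values chosen for illustration, not measurements of any chip mentioned here: a base CPI of 1.0, 20% branches, a 5% misprediction rate, and a flush penalty equal to the stage count.

```python
def effective_cpi(base_cpi: float, branch_fraction: float,
                  mispredict_rate: float, flush_penalty: int) -> float:
    """Average cycles per instruction, including branch-misprediction stalls.

    Each mispredicted branch is assumed to cost `flush_penalty` cycles of
    discarded pipeline work; everything else runs at `base_cpi`.
    """
    return base_cpi + branch_fraction * mispredict_rate * flush_penalty


# Hypothetical workload: 20% of instructions are branches and 5% of those
# are mispredicted. The flush penalty is assumed to equal the stage count.
for stages in (5, 10, 20, 30):
    cpi = effective_cpi(1.0, 0.20, 0.05, stages)
    print(f"{stages:2d} stages -> CPI {cpi:.2f}")
```

Under these assumptions, CPI grows linearly with depth, from 1.05 at five stages to 1.30 at thirty. That is the "deeper pipeline, worse penalty" trade-off in miniature.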

The Intel and HP collaboration became known as Merced, and it was met with many delays. These delays didn’t stop the hype train. Most of DEC’s assets were sold to Compaq in 1998, and Compaq later chose to phase out Alpha in favor of Merced. SGI spun off MIPS as its own company, as it too chose to focus on Merced. HP was obviously going to end PA-RISC in favor of Merced. The field of competitors had quickly shrunk.

Unlike the Elbrus team, the companies involved in Merced needed software that was compatible with existing industry standards. To this end, IBM, SCO, Sequent, and Intel launched Project Monterey, which was announced on the 26th of October in 1998. The hope was that they’d build a single UNIX operating system that could run across multiple architectures. IBM was contributing POWER ISA support, SCO was providing 32-bit x86 support, Sequent provided multi-processing support, and Intel provided the Merced architecture information. Much of the integration work and Merced software development was actually done by SCO. Within five months, SCO UNIX (essentially XENIX) was running on Merced, with testing completed and announced by the 8th of April in 1999. Over roughly the next seven months, SCO incorporated technologies from the other players into its x86 offerings and made it possible to run x86 binaries on Merced. However, in May of 1999, IBM and Intel launched the Trillian Project, which focused on porting Linux to Merced. They were joined by Caldera, CERN, Cygnus, HP, Red Hat, SGI, SuSE, TurboLinux, and VA Linux Systems. This project was completed on the 2nd of February in 2000. IBM’s turn to Linux and the death of Project Monterey likely contributed to Caldera’s success and SCO’s demise. Caldera bought SCO, and the UNIX port went nowhere. It did, however, result in some litigation later on.

After three years of delays, Merced shipped as Itanium on the 29th of May in 2001. The first OEM systems from HP, IBM, and Dell shipped in June. Itanium, whose architecture was now referred to as IA-64, was a 6-wide VLIW chip running at either 733 or 800 MHz with a 266 MT/s front side bus. It had 16K of L1 cache, 96K of L2 cache, and 2 or 4 MB of L3 cache. It was a single-core chip on socket PAC418, built on a 180nm process. To say this chip underperformed is an understatement. Given the long development time, multi-billion-dollar development cost, significant hype, and claims that it would out-compete everything on the market… Itanium was a massive failure. The 32-bit x86 chips of the time were able to best it in most workloads. Embarrassingly, the Pentium 4 (whose own performance wasn’t that good) beat Itanium on integer performance and memory bandwidth. The chip’s strengths were transaction processing and scientific applications. John Crawford, Merced project leader at Intel, reflects: “Everything was crazy. We were taking risks everywhere. Everything was new. When you do that, you're going to stumble.” The five-hundred-person team working on the chip was also relatively inexperienced, and disagreements between HP and Intel led to many compromises in design.

Those strong disagreements between HP and Intel led HP to start work on Merced’s successor, McKinley, as early as 1996. The team working on the successor chip was led by HP instead of Intel. McKinley taped out in December of 2000, before the first Merced chips shipped. McKinley addressed many of Merced’s shortcomings. It reduced memory latency, increased memory bandwidth and the L2 cache, and excluded floating point from the L1 cache. The L3 cache was moved on-die as well, which increased its bus bandwidth and decreased its latency. McKinley reduced the pipeline depth to eight stages (Merced had ten). This was offset by increases in clock speed, bus speed, and cache sizes. McKinley’s launch name was Itanium 2, and it debuted on the 8th of July in 2002. It was made on a 180nm process with 221 million transistors on chip. The clock speed was 900 MHz, the front side bus speed was 400 MT/s, and it was a single-core chip on the PAC611 socket.

Unfortunately for all of the companies involved, these improvements to Itanium came too late. On the 5th of October in 1999, AMD announced its competitor to IA-64. The SledgeHammer microarchitecture (K8, AMD64 ISA) would implement 16-bit, 32-bit, and 64-bit x86 compatibility. On the 10th of August in 2000, AMD released the x86-64 Architecture Programmer’s Overview, the instruction manual for the new architecture. Importantly, releasing the manual to developers ahead of launch ensured software compatibility in both Linux and Windows. By the time Itanium launched, a user of an Itanium system had Windows XP 64-bit Edition, Linux, or HP-UX as potential operating systems. Itanium’s x86 emulation, however, was so bad that any software written for Windows or Linux and compiled for x86 was going to run terribly. Ultimately, this meant that most of the machines sold ran HP’s own HP-UX. AMD64 did not suffer these issues at all: it could natively run any 32-bit x86 operating system and accompanying software.

The AMD Opteron was launched on the 22nd of April in 2003. Almost immediately, it became obvious that Opteron’s price-to-performance ratio made it a good choice for a broad market segment. It competed well against IBM POWER, Alpha, and Itanium 2. It had support from Microsoft (Windows Server 2003), Red Hat, SuSE, Mandrake, and Sun, and IBM even ported DB2 to Linux on Opteron. The immediate response was a revision of Itanium 2 called Madison. This increased the L3 cache to 6 MB, the clock speed to 1.5 GHz, and the bus speed to 667 MT/s. Madison was made on a new 130nm process, which helped keep power requirements from creeping too high for the socket. Madison was followed in November of 2004 by Madison 9M, which further increased the L3 cache to 9 MB and the clock speed to 1.6 GHz. Critically for scientific computing, Itanium 2 still had floating point performance that surpassed Opteron’s, and Itanium’s cache was much faster.

Intel had hoped for Itanium sales of $28 billion in 2004 (around $45.5 billion in 2024). The company was overly optimistic. Actual Itanium sales were $1.4 billion (around $2.28 billion in 2024). This was a marked improvement over 2003, when Itanium sales were in the realm of $479 million (around $799 million in 2024), but it still wasn’t really enough. In 2004, HP accounted for seventy-six percent of those sales, IBM ten percent, Dell five percent, SGI four percent, and Fujitsu one percent. The remainder were sales by Groupe Bull, NEC, Legend, LangChao, and Unisys. The surprising bit here is that Fujitsu, with one percent of the total Itanium market, sold just two hundred thirty-three Itanium systems in 2004. That figure shows both just how low Itanium sales really were and how high the price tag was. Essentially, with the disappearance of Alpha, MIPS, and PA-RISC, customers didn’t adopt Itanium but instead turned to IBM POWER, Sun SPARC, and AMD Opteron. HP’s UNIX server sales dropped by ten percent while IBM’s grew by thirteen percent. While 2004 was a bad year for Itanium, it was also the year Babayan joined Intel. With Babayan came the Elbrus E2k, which could execute both Elbrus VLIW and x86 machine code, and the team working for Babayan became Intel’s Russian R&D unit. The E2k was capable of executing twenty instructions per clock. It ran at just 300 MHz, but its high degree of parallelism made it competitive against other chips. For software, the E2k could run Linux or a number of real-time operating systems developed for it. The chip was built on a 130nm process with 75.8 million transistors and consumed just six watts of power. At Intel, Babayan became the Director of Architecture for the Software and Solutions Group and the scientific advisor of the Intel Research & Development center in Moscow. His work focused on compilers, binary translation, and security. He was the second European to become an Intel Fellow.

In January of 2005, HP ported OpenVMS to Itanium, and IBM dropped Itanium in favor of the x86-64 Nocona Xeon (NetBurst, EM64T). While IBM was abandoning Itanium, executives from Bull, Fujitsu, Siemens, Hitachi, HP, Intel, NEC, SGI, and Unisys met in San Francisco to devise a path to success for the architecture. The result was the announcement of the Itanium Solutions Alliance. Its goal was to make Itanium the industry leader in mission-critical systems by the end of the decade. The group committed a total of $10 billion (around $15.72 billion in 2024) to promote the architecture and port software.

On the 18th of July in 2006, Intel launched the Itanium 2 9000 (Montecito). This was a dual-core, hyperthreaded part built on a 90nm process with 1.72 billion transistors. Cache sizes and frequencies were increased while power consumption was reduced. Montecito was followed by Montvale, sold as the Itanium 9100, which was largely a series of fixes for issues with Montecito. These were the last chips whose design was led by HP. Intel hired HP’s engineers and purchased HP’s related assets, with HP agreeing to continue investment.

From 2001 to 2007, a total of around 184 thousand Itanium systems were sold. HP continued to account for the bulk of those sales, at around ninety-five percent. In 2008, HP’s Itanium revenue was around $4.4 billion (around $6.274 billion in 2024). This number dropped to $3.5 billion in 2009 (around $5 billion in 2024), and those figures would effectively be cut in half by 2016. In late 2009, Red Hat announced the end of support for Itanium in new products; Red Hat Enterprise Linux 5 would be the last version available. In April of 2010, Microsoft announced that Windows Server 2008 R2, SQL Server 2008 R2, and Visual Studio 2010 would be the last Microsoft products to receive Itanium support. In October of 2010, Intel released new versions of both its C and Fortran compilers for x86-64 and explicitly did not offer versions for Itanium. Oracle ended its support for Itanium in 2011, and HP really didn’t like this. HP sued Oracle for breach of contract, and the case was ultimately decided in HP’s favor.

In 2008, Hewlett-Packard paid Intel something in the neighborhood of $440 million (about $627 million in 2024) to continue the development and production of Itanium from 2009 through 2014. Another deal in 2010, for around $250 million (about $352 million in 2024), extended that time frame to 2017. Intel complied, but as its withdrawal of software support shows, it did so only because of the HP agreements. HP had HP-UX and OpenVMS with their own tooling, and it represented nearly all Itanium shipments. In line with that last deal, the final Itanium chip was the Itanium 9700, launched on the 11th of May in 2017. The top-end part was the 9760, with eight cores and sixteen threads, clocked at 2.66 GHz and packing 32 MB of cache. By this point, however, even HP seemed to have lost interest. In 2015, it had begun selling x86-64 servers in product lines that had previously been Itanium-based. The last Itanium orders were placed on the 30th of January in 2020, and the last shipments were made on the 29th of July in 2021. Elbrus, on the other hand, is still going. The latest chips are built on a 16nm process, feature sixteen cores, and can use 4TB of eight-channel ECC RAM. Some systems can be configured with four CPUs, pushing that RAM limit to 16TB. Given that these systems run Elbrus Linux and cannot natively run x86 or ARM software, standard benchmarks aren’t very useful.

Itanium was intended to be a ubiquitous replacement for x86, but it failed to compete against AMD64. Primarily, its price was far higher than AMD’s, and its performance didn’t justify the premium. For specific workloads, it was a good product and therefore made HP a decent sum. It did better than is commonly thought. I imagine that had Merced been canceled and McKinley shipped instead, the product might have had a slightly larger market share. Ultimately, Itanium’s failure came down to four things:

- making poor use of companies’ prior software investments
- being extremely expensive
- never reaching the chip’s full performance potential, since highly optimized compilers never materialized
- unfortunate launch timing, with Opteron shipping so shortly after

VLIW chips were made by other companies and saw use in the embedded market, the graphics market, and especially as DSPs. For Itanium, however, it just wasn’t to be. While innovative and interesting, the Itanic sank on her maiden voyage.

I now have readers from many of the companies whose history I cover, and many of you were present for time periods I cover. A few of you are mentioned by name in my articles. All corrections to the record are welcome; feel free to leave a comment.