Intel and the New Millennium

Losing the performance crown

This article continues a lengthy series. You may be interested in the start of Silicon Valley, Fairchild, the founding of Intel, the start of the x86 architecture, Intel’s pivot to become a processor company, the i960 and i486, the Intel Inside campaign, the FDIV bug and the Pentium Pro, and MMX, the Pentium II, and the Pentium III.

Intel had risen from humble beginnings as a memory manufacturer, pivoted to microprocessors as margins on memory fell, and proceeded to dominate the industry. The company had bested all of their competition from the low end to the high, and they seemed truly unstoppable. With the P6 microarchitecture culminating in the latest Pentium III, Xeon, and Celeron, the company had a chip for every market segment from simple word processing to workstations.

On the 23rd of June in 1999, AMD released the first Athlon (K7) for Slot A (242-pin connector). Work had begun on Athlon following Compaq’s acquisition of DEC and the cancellation of Alpha. Jerry Sanders had hired many of the Alpha engineers, among them Dirk Meyer, who led the K7 project. Sanders then worked with Motorola to produce chips that used copper interconnects (as opposed to aluminum) to reduce propagation delays and power consumption. The first K7s were manufactured on a 250nm CMOS process and clocked from 500MHz to 700MHz. These all had 512K of L2 cache, the EV6 bus (licensed from DEC), and TDPs ranging from 42W to 50W. Prices ranged from $324 to $849. Additionally, all of these parts supported MMX and Enhanced 3DNow! Models released later the same year moved to a 180nm process with 512K of L2, clocks up to 750MHz, and TDPs ranging from 31W to 40W. Models released in the first half of 2000 brought the clock up to 1GHz at a TDP of 65W. AMD had taken the performance crown from Intel, and with the help of Motorola, they could meet market demand.

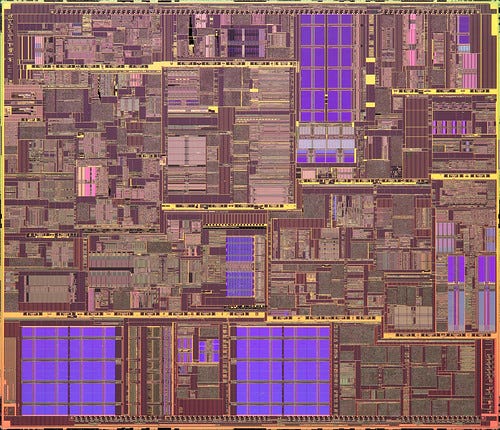

Intel released the Pentium 4 on the 20th of November in 2000. It was built of 42 million transistors on a 180nm process, and these early Pentium 4s were clocked at 1.4GHz or 1.5GHz, had 256K of L2, used a 400MHz (effective) FSB, and carried a TDP of 51.6W. This competitor to the AMD Athlon was based on the new NetBurst microarchitecture, and it was paired with the Intel 850 chipset. With the P6 microarchitecture having been such a success, Intel was doubling down on the idea of an internal RISC-like core. The traditional L1 instruction cache was replaced with a trace cache. The idea here was to store micro-operations (x86 instructions already decoded into simpler RISC-like operations) so that the decoding would not need to be repeated. Yet these weren’t cached in the usual way. On NetBurst, the decoded operations were stored in the order the processor predicted they would execute, following predicted branches. The downside, naturally, was that if the predicted execution order was wrong, the cached trace was useless. The cache wasn’t the only bet on branch prediction either. The original Pentium 4s had a 20-stage instruction pipeline (compared to 12 stages in the Athlon), and in the event of a misprediction, the entire pipeline had to be flushed and refilled. With such a long pipeline, this severely hurt overall performance. To make matters worse, such a long pipeline increased voltage requirements and generated more heat, as did the high clock speeds it was built to reach. For more on NetBurst, Chester Lam has an excellent write-up of the microarchitecture over at Chips and Cheese. For this article, I will say that when a workload had fairly predictable branches (like media encoding or benchmarks), this setup worked quite well. If, however, the user was running multiple applications at once, applications with less predictable branching, or older software, then this long pipeline, so reliant on accurate branch prediction, would deliver IPC (instructions per clock) lower than that of the Pentium III that preceded it.
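To put rough numbers on why a deeper pipeline hurts when branches are hard to predict, here is a minimal back-of-the-envelope sketch in Python. It uses the common textbook approximation that each mispredicted branch costs roughly a pipeline’s worth of cycles. The base CPI, branch frequency, and misprediction rates are hypothetical values chosen for illustration, not measurements of NetBurst or the Athlon.

```python
def effective_cpi(base_cpi: float, branch_fraction: float,
                  mispredict_rate: float, penalty_cycles: int) -> float:
    """Average cycles per instruction once branch mispredictions are included.

    Simplifying assumption: every mispredicted branch costs `penalty_cycles`
    extra cycles, approximated here by the pipeline depth that must be refilled.
    """
    return base_cpi + branch_fraction * mispredict_rate * penalty_cycles


# Hypothetical workload parameters (illustrative only, not measured data).
BASE_CPI = 1.0          # cycles per instruction with perfect prediction
BRANCH_FRACTION = 0.20  # one instruction in five is a branch

workloads = {
    "predictable (e.g. media encoding)": 0.02,  # 2% of branches mispredicted
    "branchy (e.g. mixed desktop use)": 0.10,   # 10% of branches mispredicted
}

for name, mispredict_rate in workloads.items():
    for depth in (12, 20):  # pipeline depths cited in the text
        cpi = effective_cpi(BASE_CPI, BRANCH_FRACTION, mispredict_rate, depth)
        print(f"{name:36} {depth:2}-stage: CPI {cpi:.2f}, IPC {1 / cpi:.2f}")
```

With these made-up inputs, the 20-stage pipeline loses about 3% IPC relative to the 12-stage one on the predictable workload but about 11% on the branchy one. The sketch deliberately ignores clock speed: NetBurst’s deeper pipeline was meant to be offset by higher clocks, so real throughput is IPC multiplied by frequency.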

A small tangent: naming is weird. This would have been the 80786 or i786 in the traditional scheme. Instead, it used Pentium, derived from penta (five), and then added a four to the name. Of course, this was the third microarchitecture for the Pentium series and not the fourth, but it was the fourth product series launched under the name originally intended to replace the 586 name…

The complex design of the chip led to high prices, with the first models selling for $644 to $819 in quantities of 1000. Boxed processors were made available with 128MB of RDRAM, and that raises the second important point on pricing. Not only did these new chips require new boards with new sockets, but their chipset, the i850, also required Rambus DRAM, which came in 184-pin RIMMs. These offered the advantage of a dual-channel design, but at a higher cost than DDR (both were faster than the preceding PC-133 in most cases). In the most premium of premium systems, Rambus could operate in quad-channel mode, increasing total throughput considerably, and the design was explicitly multi-channel. All open RAM slots in a Rambus system required CRIMMs (continuity RIMMs), a sort of SCSI terminator but for RAM. To get any advantage over earlier RAM types, the owner of a Rambus system needed at least two matching RIMMs, increasing costs yet again. This wasn’t the first appearance of Rambus, as there had been Pentium III workstations that used it, but this was the first time that Intel had attempted to make it the only choice for system makers working with Intel chips.

Putting all of this together resulted in the Pentium 4 being expensive, hot, and slow for most consumers. Still, for those who didn’t mind overpaying, had deep pockets, and had all new software, it may not have been too bad.

AMD hadn’t been the only beneficiary of DEC’s demise. Intel gained StrongARM from DEC in 1997, and the first use of StrongARM at Intel was to replace the i860 and i960. In August of 2000, Intel announced the XScale architecture. Intel XScale was largely an implementation of the ARMv5TE ISA, but it omitted hardware floating point. ARMv5TE is the architecture version used by ARM9E cores: ARMv5 plus the 16-bit Thumb instruction set and the DSP (“E”) extensions, but without Jazelle. While many people today are not familiar with XScale, it was a hit. Intel XScale CPUs powered the BlackBerry, the Palm Zire, Tungsten, and Treo, the Sharp Zaurus, the Compaq iPAQ, the Creative Zen, and the Amazon Kindle.

Other events for Intel in the year 2000 included the introduction of SpeedStep (primitive in comparison to Transmeta LongRun, offering just two modes) in the mobile Pentium III line, shipment of more prototype Itanium chips, strong continued sales of Pentium III Xeons, strong continued sales of flash memory, increases in network controller sales, and the acquisition of roughly a company per month.

Intel had grown wildly over the prior decade, and this resulted in a large increase in the number of products the company was developing, the number of locations where it operated, and the number of divisions within the company. This, in turn, led to delays, precisely the opposite of Intel’s prior reputation. The company had failed to meet demand in the first half of the year, Itanium had been severely delayed (by around three years once it failed to ship in 2000), and the Pentium 4 didn’t live up to expectations. Despite it all, Intel ended the year 2000 with 86,100 employees, assets worth $47.9 billion, and $10.5 billion in income on revenues of $33.7 billion.

Despite dire predictions and mountains of hype, the world failed to end and the Sun rose on the 1st of January in 2000. Yet, for many people in the computing industry, I imagine it felt as if their worlds were indeed ending. In March of 2000, the dot-com bubble peaked and burst, and a long market decline began. On the 14th of April in 2000 (a Friday), the Nasdaq fell 9%, bringing its total drop for that week to 25%. With stocks falling and companies hurting, people began selling their positions to cover taxes. By November, dot-com stocks in aggregate had lost around 75% of their value, and these losses continued right on through the New Year.

While the rest of the industry was hurting, Intel was building. As Intel saw it, only 10% of the human population had internet access, and only around 500 million PCs were in use around the globe. The market could still grow. Intel spent $7.3 billion on capital expenditure during 2001. Quite a bit of this was on fabs as the company built out their 130nm capacity and transitioned to 300mm wafers from 200mm wafers. The company also spent $3.8 billion on R&D, and this resulted in Intel producing silicon gates just 20nm wide capable of switching more than a trillion times each second.

On the 21st of May in 2001, Intel released NetBurst-based Xeons clocked at up to 1.7GHz as well as the Intel 860 chipset to accompany them. The chipset supported sockets 603 and 604, up to 4GB of RDRAM in a dual channel configuration with ECC, AGP 4X, and an effective FSB speed of 400MHz.

On the 29th of May in 2001, Intel finally shipped Itanium. This was a 6-wide VLIW microprocessor running at either 733MHz or 800MHz. It had 16K+16K L1, 96K L2, and either 2MB or 4MB L3. Itanium fit into the PAC418 socket, and it was built on a 180nm process. One of the systems with the most press was the IBM IntelliStation Z-Pro 6894. While this machine was outwardly boring, being largely similar to all IBM desktop hardware of the time, it was far more interesting internally. It featured two Intel Itanium microprocessors, a quad data rate 133MHz FSB, a maximum of 16GB of registered, quad-interleaved ECC SDRAM, IDE and SCSI interfaces, 1Gbps Ethernet, a 16MB Matrox or 32MB Nvidia AGP card, and an 800W PSU. For comparison, it launched alongside the M-Pro 6850, which featured two Intel P4 Xeons with either 256K or 512K L2, a maximum of 4GB of ECC Rambus RAM, IDE and SCSI, 100Mbps Ethernet, a choice of 32MB Matrox, 64MB Nvidia, 128MB ATI, or 128MB 3Dlabs video cards, and a 480W PSU. This initial launch of Itanium went extremely badly. In its first full quarter of sales, fewer than 500 systems (from all manufacturers) were sold. How much of this was the product and how much was the market is up for debate, but it was bad regardless.

At this point, it was quite clear to Intel that RDRAM wasn’t doing well in the market. This led to the announcement of the Intel 845 chipset in July for sockets 423 and 478. Here, we see the same 100MHz quad data rate bus, but this chipset supported up to 3GB of 133MHz SDRAM or 2GB of DDR. The chipset was similar to the i860 and supported ECC and AGP 4X as well. Yet, when the chipset was officially released in September of 2001, board manufacturers were restricted to SDRAM, with DDR boards forced to wait until 2002. This was a rather odd move given that VIA had already released the P4X266 supporting both DDR and SDRAM, and SiS had already released the 645 chipset supporting DDR333. While both of these competing chipsets were single channel, DDR transferred data twice per clock, so they provided lower maximum bandwidth than the i850 but significantly higher bandwidth than the i845, even at their slowest. The i845 had a maximum memory bandwidth of 1.06GB/s, the i850 3.2GB/s, the SiS 645 2.7GB/s, and the P4X266 2.1GB/s. Despite the i850’s rather significant lead on paper, in real-world usage the differences weren’t too severe. In 3DMark 2001 on Windows 98 SE, the Pentium 4 with the i850 and Rambus scored 4117, while the same chip with the P4X266 and DDR scored 3985. The difference was more pronounced with Windows XP, where the i850 scored 3985 and the P4X266 scored 3145. There was serious oversupply in 2001, so prices had fallen sharply, and by July, 128MB of Rambus cost $146 (around $262 in 2025 dollars), DDR cost $56, and SDRAM cost just $35. At a time when people were hurting for money, the cheaper product was going to win. While Intel had been releasing more SKUs of the Pentium 4 throughout the year, the 845 chipset was the processor’s savior, and the Pentium 4 finally began to outsell the Pentium III. By the end of the year, the Pentium 4 was the best-selling CPU on the market.
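The peak bandwidth figures above follow from simple arithmetic: bytes per transfer × transfers per second × number of channels. The Python sketch below reproduces them. The specific configurations (PC133 SDRAM on the i845, dual-channel PC800 RDRAM with 16-bit channels on the i850, DDR333 on the SiS 645, and DDR266 on the P4X266) are my assumption about the fastest supported memory in each case, and the results are theoretical peaks rather than real-world throughput.

```python
from dataclasses import dataclass


@dataclass
class MemoryConfig:
    name: str
    bus_width_bits: int       # data width of one channel (ECC bits excluded)
    clock_mhz: float          # I/O clock of the memory bus
    transfers_per_clock: int  # 1 for SDR SDRAM, 2 for DDR and RDRAM
    channels: int = 1

    def peak_gb_per_s(self) -> float:
        bytes_per_transfer = self.bus_width_bits / 8
        mt_per_s = self.clock_mhz * self.transfers_per_clock  # megatransfers/s
        # MB/s -> GB/s using decimal units, as memory vendors did
        return bytes_per_transfer * mt_per_s * self.channels / 1000


# Assumed configurations (fastest memory each chipset supported).
CONFIGS = [
    MemoryConfig("i845 (PC133 SDRAM)", 64, 133, 1),
    MemoryConfig("i850 (dual-channel PC800 RDRAM)", 16, 400, 2, channels=2),
    MemoryConfig("SiS 645 (DDR333)", 64, 166, 2),
    MemoryConfig("VIA P4X266 (DDR266)", 64, 133, 2),
]

for cfg in CONFIGS:
    print(f"{cfg.name:34} {cfg.peak_gb_per_s():.2f} GB/s")
```

Rounded, these give the 1.06, 3.2, 2.7, and 2.1GB/s quoted above. For context, the Pentium 4’s 64-bit bus at 100MHz with four transfers per clock also works out to 3.2GB/s, which helps explain why dual-channel RDRAM looked like a good match on paper.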

Intel closed the year with $1.29 billion in income on revenues of $26.5 billion. Staff had been reduced to 83,400, but Intel wasn’t alone. In the USA, 1,735,000 jobs were lost over the course of the year.

In January of 2002, the Pentium 4 was moved to 130nm and clocks were increased to 2.2GHz. In May, the i845 got a refresh adding support for a 533MHz FSB, USB 2.0, and Hyper-Threading CPUs. The mobile equivalent, the i845MP, got much of the same treatment but also included Enhanced SpeedStep.

In March of 2002, Intel released the Xeon MP. These chips were clocked from 1.4GHz to 1.6GHz and had 256K of L2 and either 512K or 1MB of L3. All of these Xeons supported MMX, SSE, SSE2, and Hyper-Threading, and they could be put into dual, quad, or octo-processor configurations. So, at the high end, one computer in 2002 could have had 8 cores and 16 threads running at 1.6GHz. Despite the specifications, the Xeon MP didn’t have the same success as earlier Xeon chips. Part of the reason was that this first outing of Hyper-Threading carried a price premium while not quite delivering on performance.

Itanium 2 was publicly announced on the 8th of July in 2002. These chips used the PAC611 socket and a 128bit FSB. They were built of 221 million transistors (25 million for logic, the rest for cache) manufactured on a 180nm process. Cache sizes were increased, with the L2 at 256K and the L3 at either 1.5MB or 3MB, and clock speeds rose to either 900MHz or 1GHz. Performance on the second generation Itanium was far better than that of the first, but by this point, Intel’s own Xeons were far more popular. Sales did increase for Itanium, but Intel had shifted to marketing the product exclusively to enterprise customers while keeping workstations and servers for the Xeon.

In November of 2002, Intel released the first Hyper-Threading Pentium 4 for socket 478. This part was clocked at 3.06GHz, packed 8K of L1 data cache, a 12K-µop trace cache, and 512K of L2, and was fabricated on a 130nm process. This was the first consumer CPU to cross the 3GHz barrier, and it was the first consumer CPU to feature Hyper-Threading. Importantly, unlike on the Xeon MP, Hyper-Threading really helped this CPU. In most workloads, the Pentium 4 HT was the mainstream x86 performance leader at launch. The Pentium 4 had finally and decisively beaten the K7.

The overall economy wasn’t strong throughout 2002. The Nasdaq had peaked in 2000 with a total value of $6.7 trillion, and by October of 2002, it had bottomed out at $1.6 trillion. Intel posted revenues for the year of $26 billion and income of $3.1 billion with 78,700 employees.

In 1999, Intel had begun work on a chip codenamed Timna. This was a cost-reduced version of the Pentium III intended to power budget PCs. The task of designing this processor was given to Intel’s Haifa team, and they delivered. Unfortunately, one of the cost-saving measures was the integration of the memory controller. This would normally be good for both price and performance, but this was a Rambus controller, and Rambus was still the more expensive choice. Given this situation, the integrated controller meant that Timna never saw the market. The team was then tasked with creating a mobile CPU. They quickly realized that the Pentium 4 wasn’t a suitable basis: NetBurst’s long pipeline would simply require too much power and generate too much heat. Given the team’s most recent project, they knew exactly what was needed. They began working on a P6-based core. What was finally delivered on the 12th of March in 2003 was one of the greatest Intel chips ever produced. It was neither the most powerful nor the most efficient, but it gave Intel a win at a time when it desperately needed one, and it paved the way for Intel’s future products.

This chip, codenamed Banias, became the Pentium M. It was essentially an Intel P6 core (Pentium Pro, II, and III) with a slightly longer pipeline, SSE2, Enhanced SpeedStep, PAE, a better branch predictor, and more cache. This core was then given the P4’s 64bit quad data rate bus. It featured a 64K L1 (32K data, 32K instruction) and 1MB of L2. The chip came in 6 variants, and each variant had two modes of operation. At the top of the line there was a 1.6GHz part at 1.48V with a 24.5W TDP; its alternate mode was 600MHz at 0.96V. At the bottom in performance was a 900MHz part with an alternate mode of 600MHz at 0.84V, and the TDP for this lowest-performance part was just 7W. The Pentium M was paired with either the 855PM or 855GM chipset as well as the Intel PRO/Wireless 2100 card (802.11a/b).

These three parts together made up the Intel Centrino platform. The two chipsets were power-optimized revisions of the i845 supporting USB 2.0, 2X ATA, AC’97 2.3, 64bit DDR266, and AGP 4X. The chipsets were so power efficient that they didn’t require cooling hardware of any kind. The parts were sold together, with the 1.6GHz package carrying a price of $720, the 1.5GHz $506, the 1.4GHz $377, the 1.3GHz $292, the 1.1GHz $345, and the 900MHz $324. While those last two parts were slower than the 1.3GHz chip, they were also far better on power, hence the higher prices. Over 5 million Centrino packages were shipped before the year’s end.

One of the engineers AMD had brought over from DEC was Jim Keller. Having been instrumental on K7, he led the team for K8. What he and his team created at AMD was a devastating blow to Intel’s hopes for both Itanium and NetBurst. On the 22nd of April in 2003, AMD announced Opteron, and on the 30th of June, it hit the market at $229 for the 140, $438 for the 142, and $699 for the 144. By July, the prices for the 144 and 142 had fallen to $438 and $292 respectively. Here, the world gained a 64bit x86 platform, and it was far lower in cost than Intel’s 64bit offering. Even better, it could use DDR memory. Just as Compaq had once taken the technological lead from IBM, AMD had now taken the technological lead from Intel. Opteron, like all AMD64 CPUs, was fully backward compatible with 32bit and even 16bit x86 code. The architecture added 8 new 64bit general-purpose registers and extended the existing 8 to 64 bits. It included SSE and SSE2 and added 8 new SSE2 registers. By virtue of being 64bit, Opteron greatly increased the amount of memory the CPU could address, and it moved the memory controller onto the CPU, which lowered latency. That memory controller could, however, be disabled, allowing for an off-die controller should one be needed. Speaking of memory, the memory bus in Opteron was 144 bits wide (two 72-bit DIMMs, each 64 data bits plus 8 ECC check bits). With Opteron, AMD also introduced the HyperTransport bus. This was a 16bit wide, point-to-point, packet-based link used to connect I/O controllers, AGP/PCI bridges, or even CPUs. In the first generation Opteron, there were three HT links, each offering 3.2GB/s in each direction for 6.4GB/s total per link. Any Opteron could connect to another with two HT links and leave one for I/O. The memory system was more scalable with a memory controller per CPU, but as with any NUMA design, some workloads fared better than others. The K8 Opteron was built of 105.9 million transistors on a 130nm silicon-on-insulator process.
When tested in a variety of scenarios, the Opteron handily bested the Xeon without even using its 64bit capabilities.
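The HyperTransport numbers come from the same kind of arithmetic as memory bandwidth. The Python sketch below assumes a first-generation Opteron link that is 16 bits wide in each direction and clocked at 800MHz with data on both clock edges; those link parameters are my assumption rather than something stated above, but they reproduce the 3.2GB/s and 6.4GB/s figures.

```python
def link_bandwidth_gb_per_s(width_bits: int, clock_mhz: float,
                            transfers_per_clock: int = 2) -> float:
    """Peak one-direction bandwidth of a point-to-point link, in decimal GB/s."""
    return (width_bits / 8) * clock_mhz * transfers_per_clock / 1000


# Assumed first-generation Opteron HyperTransport link: 16 bits each way, 800MHz, DDR.
per_direction = link_bandwidth_gb_per_s(16, 800)
per_link = 2 * per_direction      # both directions at once
all_three_links = 3 * per_link    # each Opteron had three links

print(f"per direction: {per_direction:.1f} GB/s")   # 3.2 GB/s
print(f"per link:      {per_link:.1f} GB/s")        # 6.4 GB/s
print(f"three links:   {all_three_links:.1f} GB/s")  # 19.2 GB/s
```

The aggregate across all three links is only an upper bound, since in practice at least one link was typically devoted to I/O rather than to other processors.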

On the 8th of September in 2003, Intel announced the Pentium 4 Extreme Edition ahead of the AMD Athlon 64 that was set to launch on the 23rd. This was something of a stopgap product as Intel had been working on an implementation of AMD’s 64bit x86 extensions, but that product wasn’t yet ready. Or, at least, Intel made no 64bit x86 parts available to anyone other than IBM. The IBM eServer xSeries 306 was available with a Socket 478 Pentium 4 with 64bit extensions (IBM part number 26K8430) in 2004. At any rate, the P4EE was very similar to the Xeon MP but on Socket 478 with 2MB of L3. It was clocked at 3.4GHz, had an 800MHz FSB, and a TDP of 102.9W. While the P4EE was a good performer, it didn’t quite take the performance crown from the Athlon 64, and it ran hot.

Intel’s Xeon server market continued growing throughout 2003, and the company managed to cross the 100,000 unit mark for Itanium. XScale likewise continued to grow in market share, with the PXA260 having shipped in March. The PXA260 was about half the physical size of Intel’s initial XScale parts, and variants were available with Intel StrataFlash on-package in sizes of 16MB 16bit, 32MB 16bit, or 32MB 32bit. Intel was also still selling a variety of products that do not typically get much attention: network adapters (the 10Gbps PRO/10GbE LR and the gigabit PRO/1000), network processors (IXP420, IXP421, and IXP422, which largely went to Cisco), the TXN18107 10Gbps XFP transceiver, microcontrollers (186, 386, 486, and i960, largely sold to automotive companies), the PXA800F cellular processor, and the D5205 TDMA (Time Division Multiple Access) baseband chipset. Naturally, the company also continued sales of older versions of most of their products.

Up to this point in Intel’s history, the company had been the leader of the microcomputer revolution, even if at first unwillingly so. They had driven down the price of computing while greatly increasing the compute power available per dollar. This meant that by the early 2000s, a workstation was just a high-end PC, minicomputers were dead, and mainframes were a niche product. While Intel closed 2003 no longer the undisputed technological leader in the microprocessor space, they remained a strong company with excellent products, and they were well positioned to reclaim their throne. They certainly had plenty of money to do so. The company had $30 billion in revenue for 2003 and income of $5.6 billion. They had invested $16 billion in property, plants, and equipment, and $4.36 billion in R&D.

I have readers from many of the companies whose history I cover, and many of you were present for time periods I cover. A few of you are mentioned by name in my articles. All corrections to the record are welcome; feel free to leave a comment.
